|

This document is under active development and discussion!

If you find errors or omissions in this document, please don’t hesitate to

submit an issue or

open a pull request with

a fix. We also encourage you to ask questions and discuss any aspects of the project on the

mailing lists or IRC.

New contributors are always welcome!

|

Preface

This guide covers Apache Syncope services for:

-

identity management, provisioning and compliance;

-

access management, single sign-on, authentication and authorization;

-

API gateway, secure proxy, service mesh, request routing.

1. Introduction

Apache Syncope is an Open Source system for managing digital identities in enterprise environments, implemented in Java EE technology and released under the Apache 2.0 license.

Often, Identity Management and Access Management are jointly referred, mainly because their two management worlds likely coexist in the same project or in the same environment.

The two topics are however completely different: each one has its own context, its own rules, its own best practices.

On the other hand, some products provide unorthodox implementations so it is indeed possible to do the same thing with both of them.

From the definitions above, Identity Management and Access Management can be seen as complementary: very often, the data synchronized by the former are then used by the latter to provide its features - e.g. authentication and authorization.

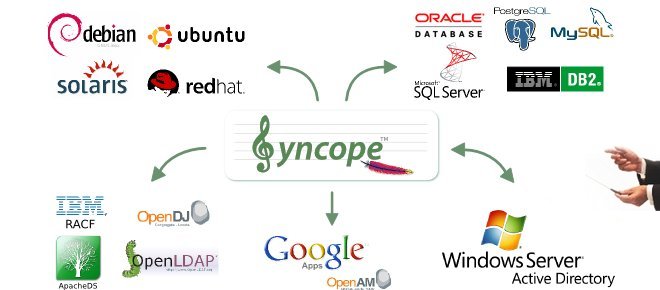

1.1. Identity Technologies

Identity and Access Management (IAM) is not implemented by a single technology; it is instead a composition of heterogeneous technologies - differing by maturity, scope, applicability and feature coverage - which require some 'glue' to fit together.

As with other application domains, it can be observed that tools that appeared earlier tend to partially overlap with more recent, targeted products.

1.1.1. Identity Stores

Identity Stores are the places where identity-related information is stored.

An Identity Store can be shared among several systems: as a result, there is a single place where account data is managed by administrators, and the same password can be used for the same user for accessing different applications.

Various Identity Store types are available:

-

Flat files (XML, CSV, …)

-

LDAP

-

Relational databases (MySQL, Oracle, Microsoft SQL Server, PostgreSQL, …)

-

Platform-specific (Microsoft Active Directory, FreeIPA, PowerShell, …)

-

Web services (REST, SOAP, …)

-

Cloud providers

-

…and much more.

|

Aren’t Identity Stores enough?

One might suppose that a single Identity Store can solve all the identity needs inside an organization, but there are a few drawbacks with this approach:

|

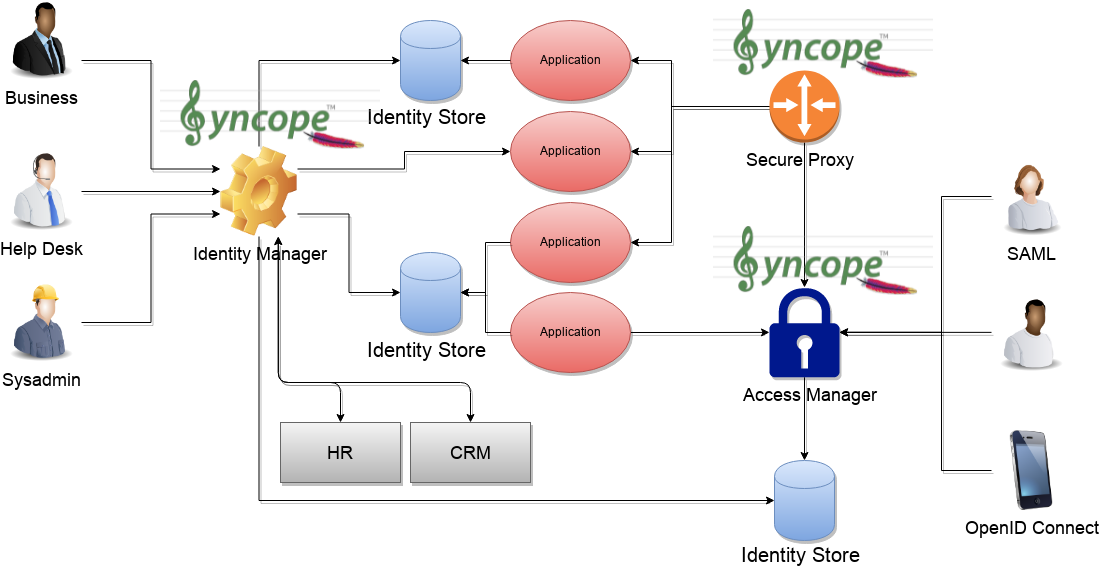

1.1.2. Identity Managers

The main role of Identity Managers is to keep Identity Stores synchronized as much as possible.

Some other characteristics and features provided:

-

Adapt to Identity Store data and application models

-

Do not require changes in Identity Stores or applications

-

Build virtual unified view of identity data distributed across several Identity Stores

-

Allow to define and enforce security policies

-

Permit workflow definition, with transitions subject to approval

-

Focused on application back-end

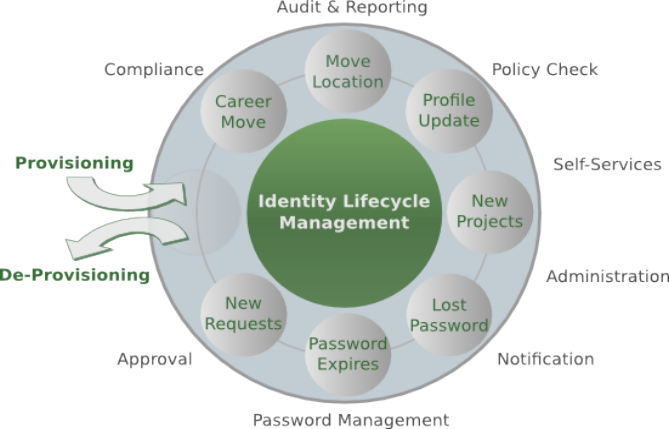

In brief, Identity Managers take heterogeneous Identity Stores (and business requirements) as input and build up high-level identity data management throughout what is called the Identity Lifecycle.

|

Applications can typically integrate with Identity Managers by:

|

1.1.3. Access Managers

Access Managers focus on the application front-end, enforcing application access via authentication (how users are let access a given system) and authorization (which capabilities each user owns on a given system).

Several practices and standards can be implemented by Access Managers:

|

Applications can typically integrate with Access Managers by:

|

1.1.4. The Complete Picture

The picture below shows a typical scenario where an organization’s infrastructure is helped by identity technologies in providing secure and trusted application access to end-Users, while keeping different levels of data and processes under control for business owners, help-desk operators and system administrators.

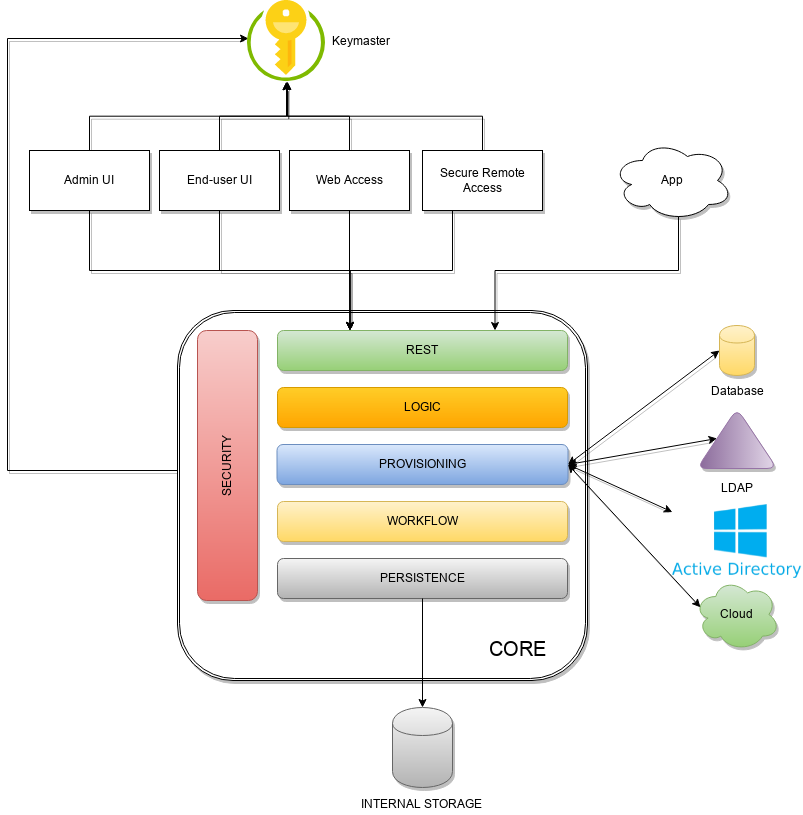

2. Architecture

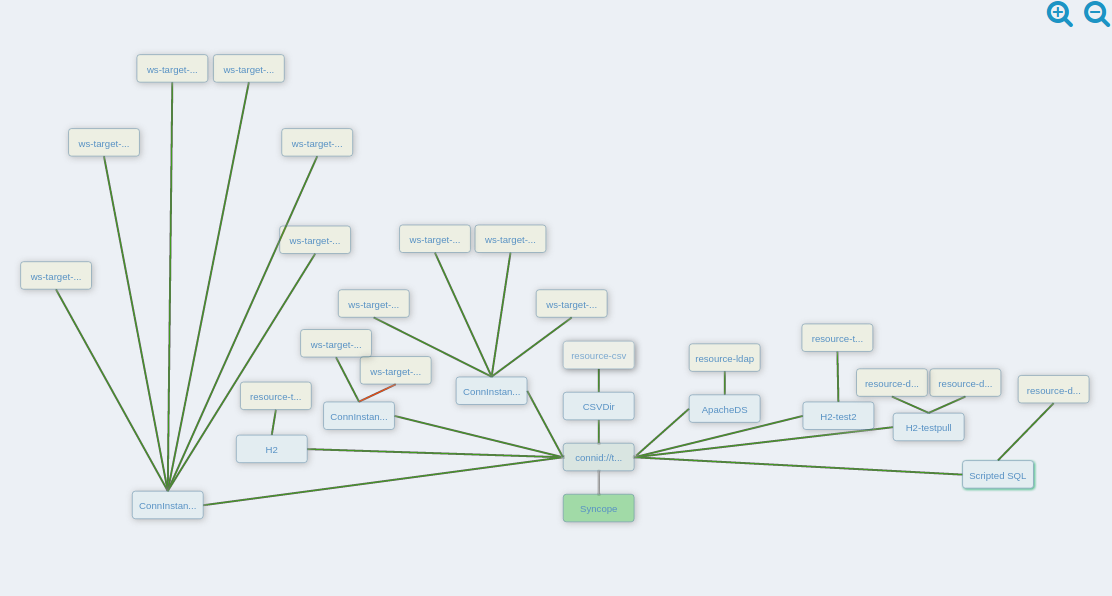

Apache Syncope is made of several components, which are logically summarized in the picture below.

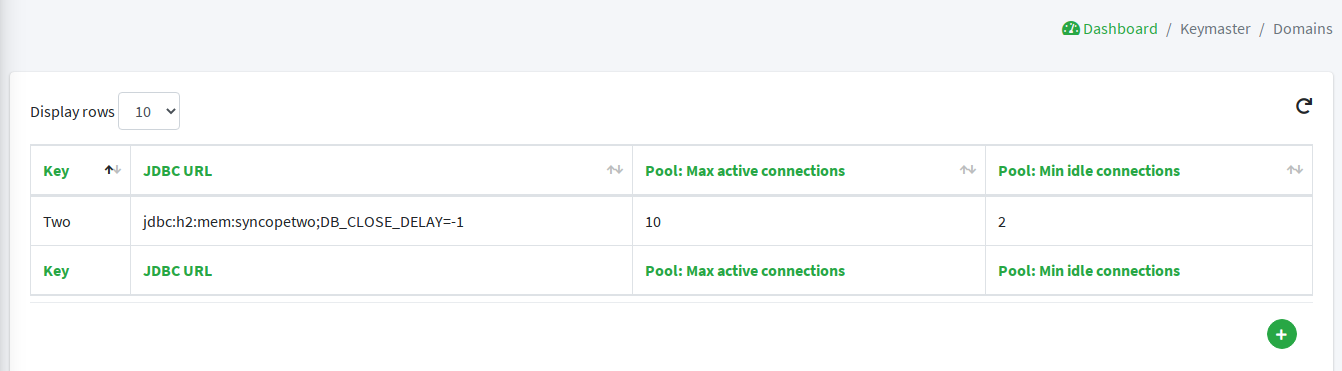

2.1. Keymaster

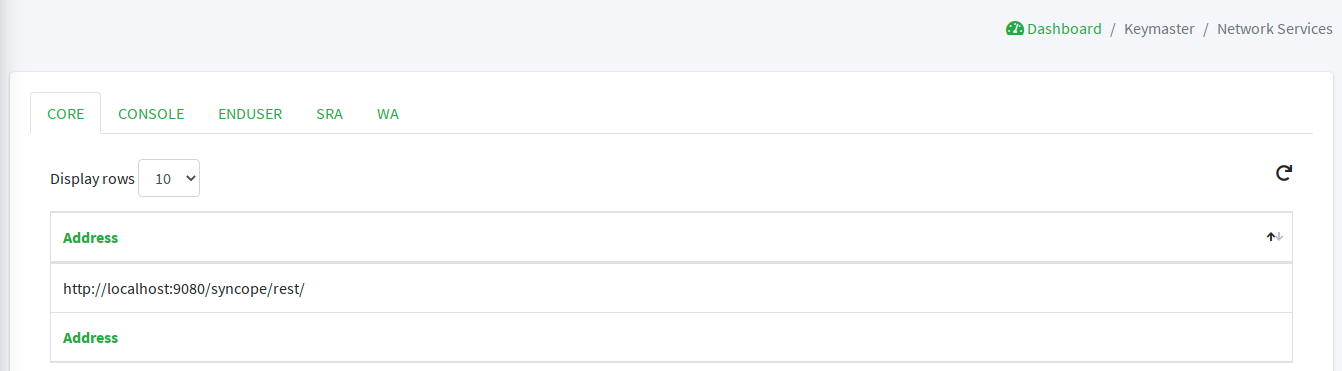

The Keymaster allows for dynamic service discovery so that other components are able to find each other.

On startup, all other component instances will register themselves into Keymaster so that their references

can be found later, for intra-component communication.

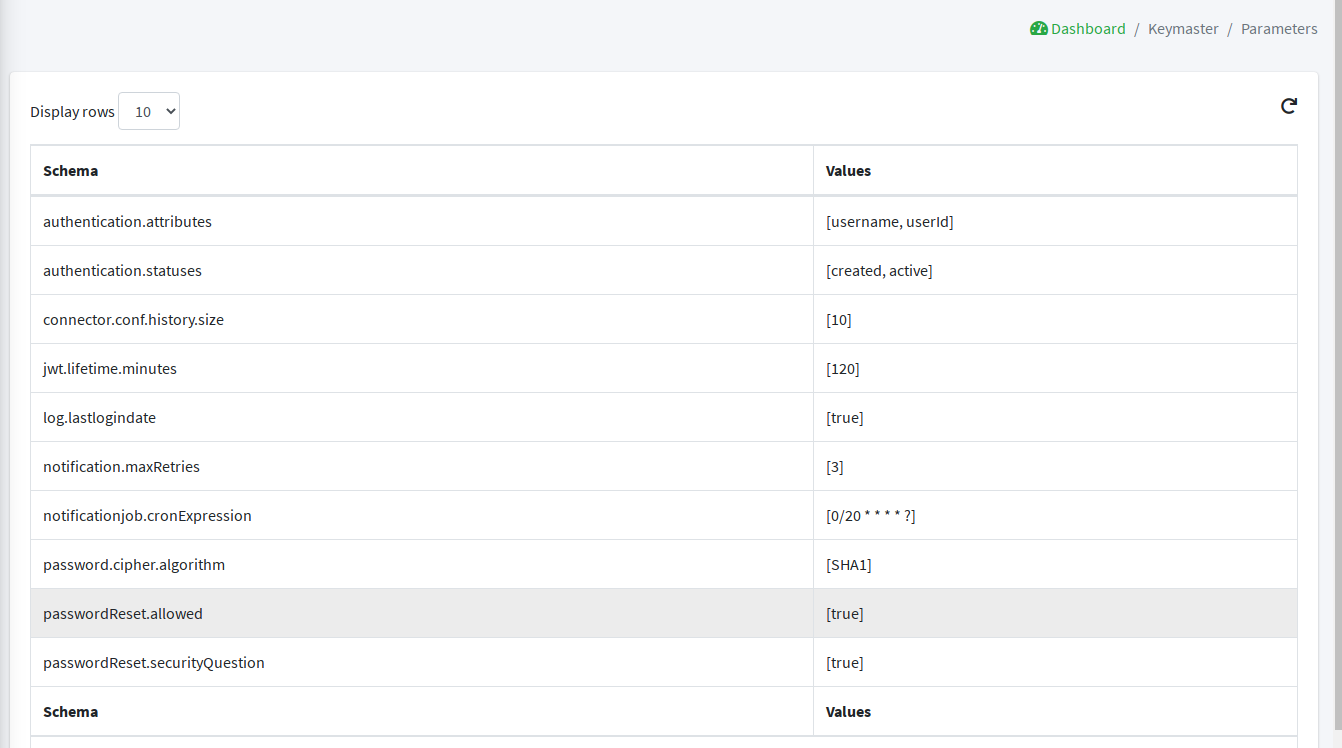

In addition, the Keymaster is also used as key / value store for configuration parameters and as a directory for defined domains.

Two different implementations are provided, following the actual needs:

-

as an additional set of RESTful services exposed by the Core, for traditional deployments (also known as Self Keymaster);

-

as a separate container / pod based on Apache Zookeeper, for microservice-oriented deployments.

2.2. Core

The Core is the component providing IdM services and acting as central repository for other components' configuration.

The Core is internally further structured into several layers, each one taking care of specific aspects of the identity management services.

2.2.1. REST

The primary way to consume Core services is the RESTful interface, which enables full access to all the features provided. This interface enables third-party applications, written in any programming language, to consume IdM services.

The rich pre-defined set of endpoints can be extended by adding new ones, which might be needed on a given Apache Syncope deployment to complement the native features with domain-specific operations.

At a technical level, the RESTful interface is a fully-compliant JAX-RS 2.1 implementation based on Apache CXF, natively dealing either with JSON, YAML and XML payloads.

More details are available in the dedicated usage section.

2.2.2. Logic

Right below the external interface level, the overall business logic is responsible for orchestrating the other layers, by implementing the operations that can be triggered via REST services. It is also responsible for controlling some additional features (notifications, reports and auditing).

2.2.3. Provisioning

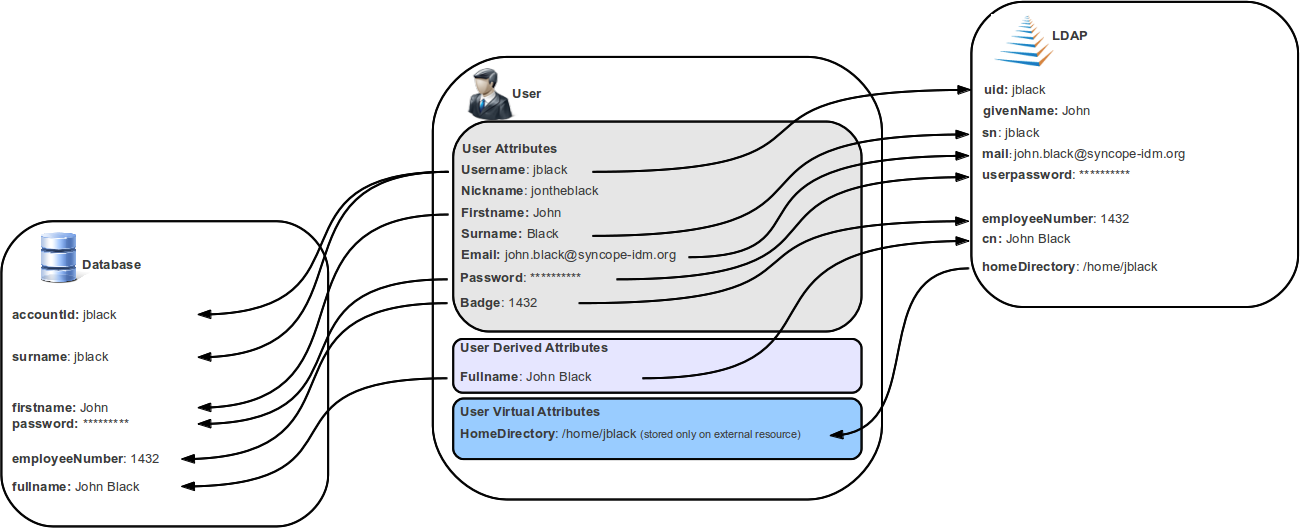

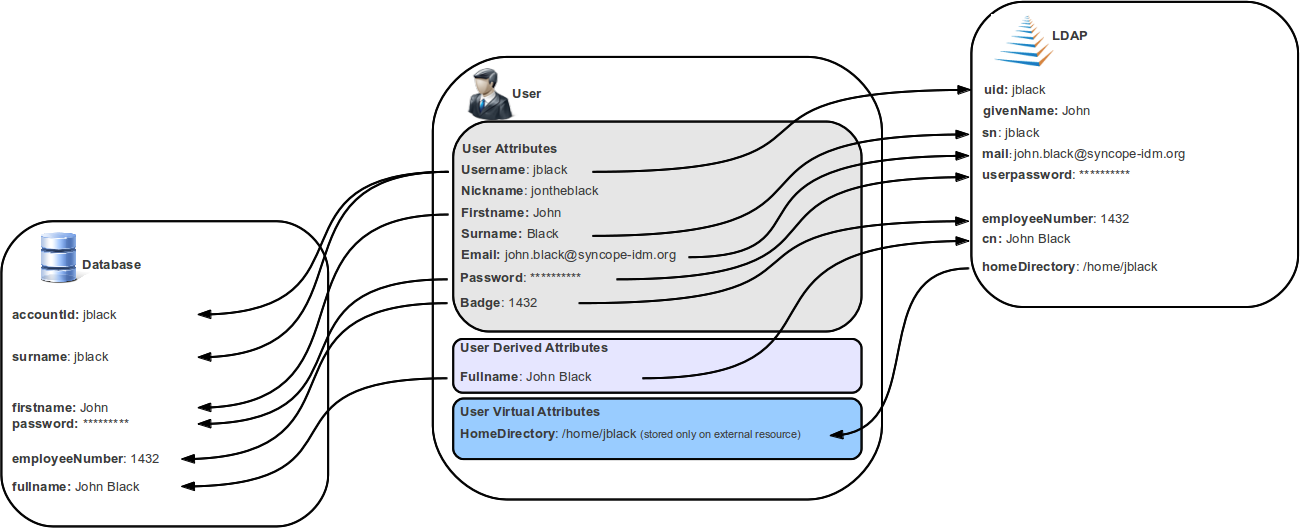

The Provisioning layer is involved with managing the internal (via workflow) and external (via specific connectors) representation of Users, Groups and Any Objects.

One of the most important features provided is the mapping definition: internal data (Users, for example)

representation is correlated with information available on the available Identity Stores.

Such definitions constitute the pillars of inbound (pull) and outbound (propagation / push)

provisioning.

The default implementation can be sometimes tailored to meet the requirements of a specific deployment, as it is the crucial decision point for defining and enforcing the consistency and transformations between internal and external data.

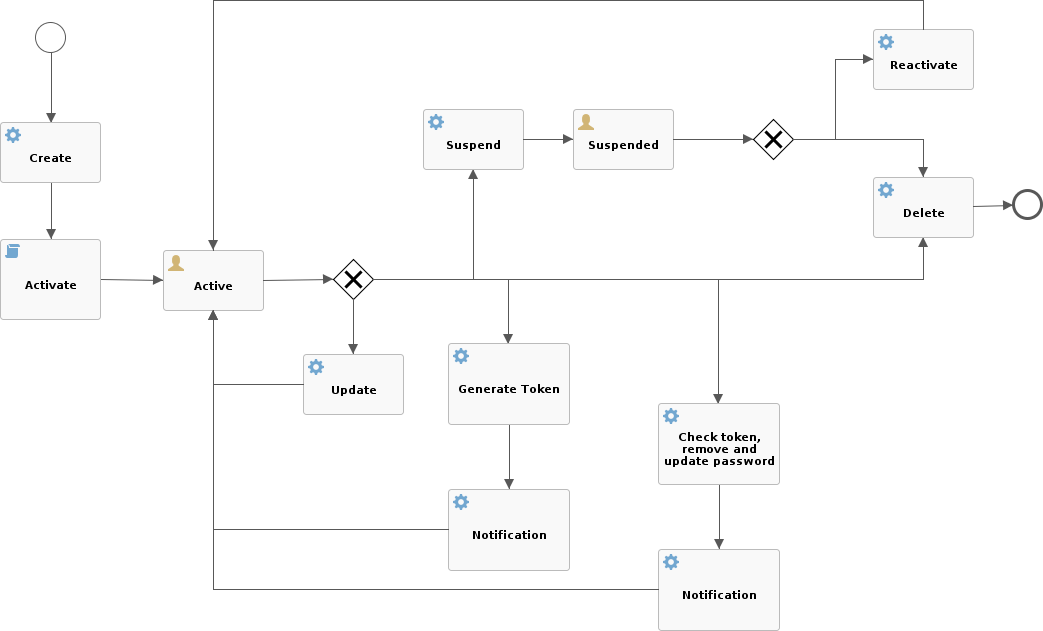

2.2.4. Workflow

The Workflow layer is responsible for managing the internal lifecycle of Users, Groups and Any Objects.

Besides the default engine, another engine is available based on Flowable, the reference open source BPMN 2.0 implementation. It enables advanced features such as approval management and new statuses definitions; a web-based GUI editor to model workflows and user requests is also available.

2.2.5. Persistence

All data (users, groups, attributes, resources, …) is internally managed at a high level using a standard JPA 2.2 approach based on Apache OpenJPA. The data is persisted into an underlying database, referred to as Internal Storage. Consistency is ensured via the comprehensive transaction management provided by the Spring Framework.

Globally, this offers the ability to easily scale up to a million entities and at the same time allows great portability with no code changes: MySQL, MariaDB, PostgreSQL, Oracle and MS SQL Server are fully supported deployment options.

2.2.6. Security

Rather than being a separate layer, Security features are triggered throughout incoming request processing.

A fine-grained set of entitlements is defined which can be granted to administrators, thus enabling the implementation of delegated administration scenarios.

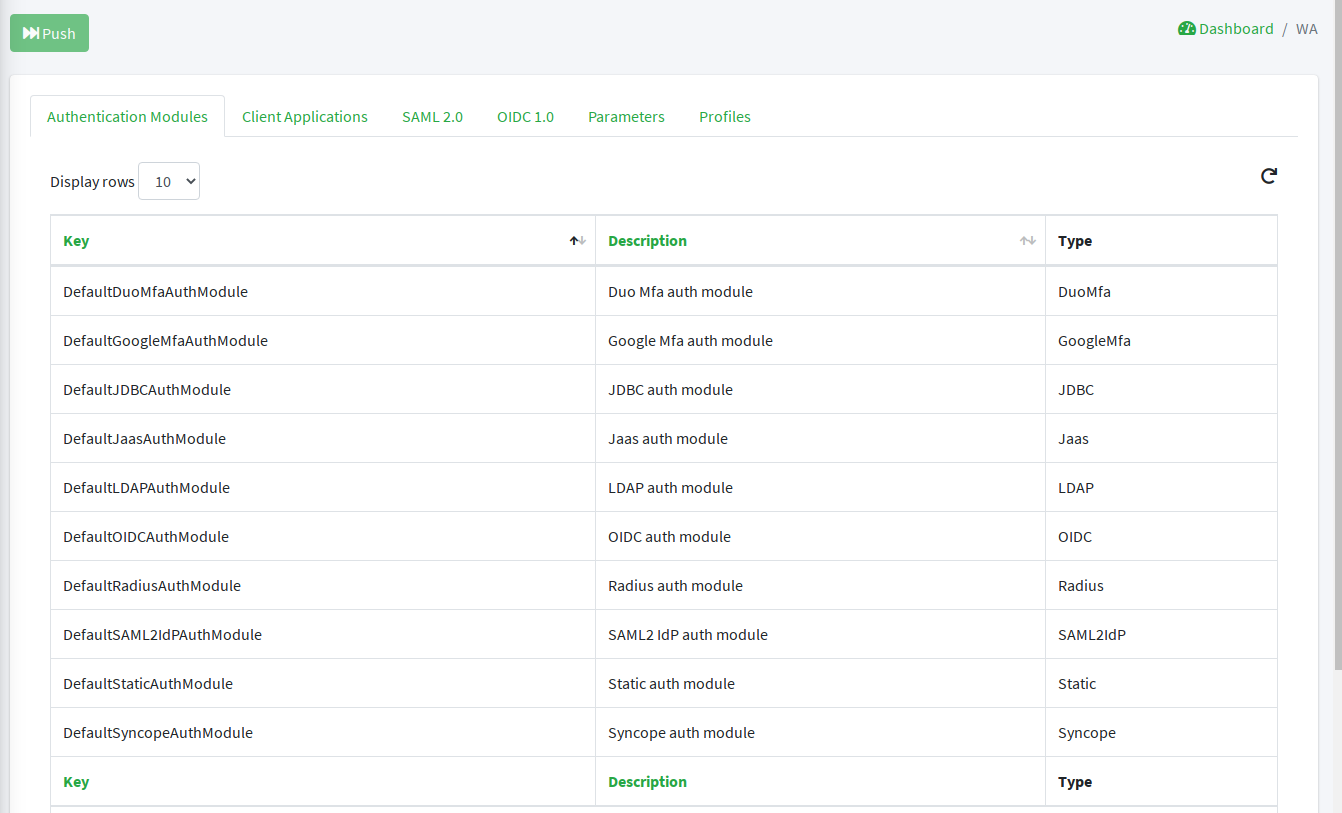

2.3. Web Access

The Web Access component is based on Apereo CAS.

In addition to all the configuration options and features from Apereo CAS, the Web Access is integrated with Keymaster, Core and Admin UI to offer centralized configuration and management.

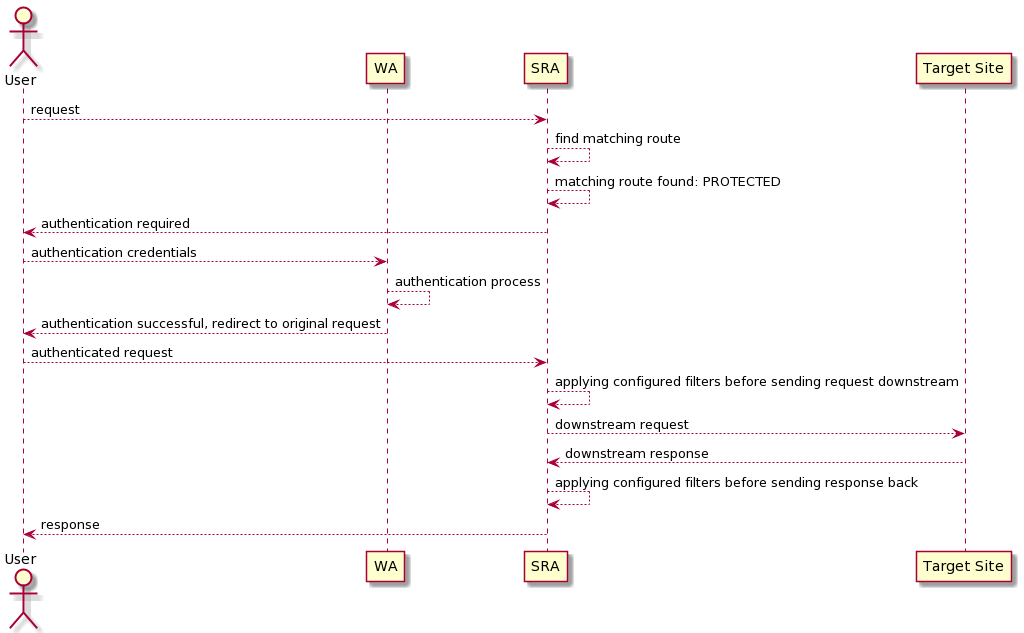

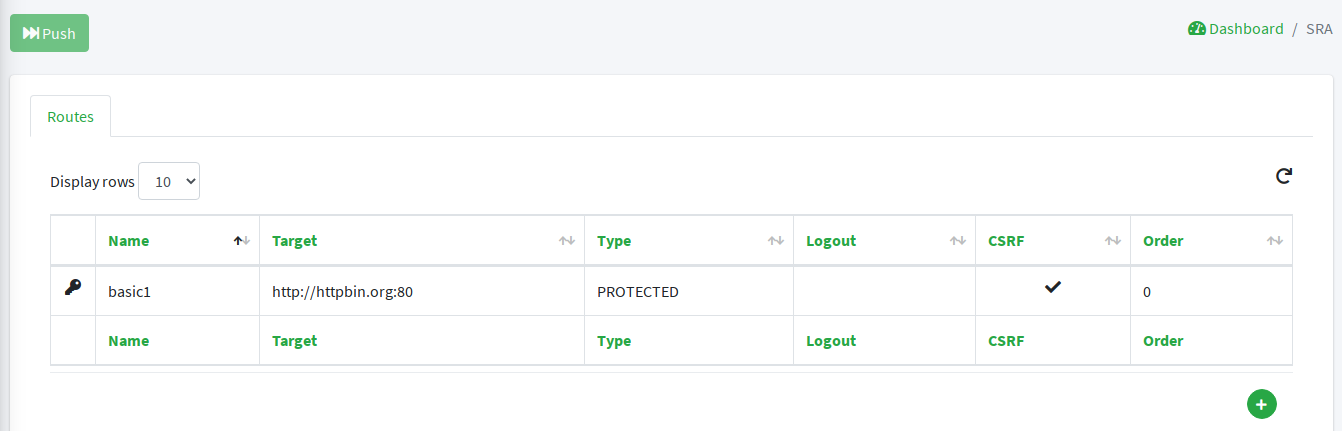

2.4. Secure Remote Access

The Secure Remote Access component is built on Spring Cloud Gateway.

In addition to all the configuration options and features from Spring Cloud Gateway, the Secure Remote Access is integrated with Keymaster, Core and Admin UI to offer centralized configuration and management.

The Secure Remote Access allows to protect legacy applications by integrating with the Web Access or other third-party Access Managers implementing standard protocols as OpenID Connect or SAML.

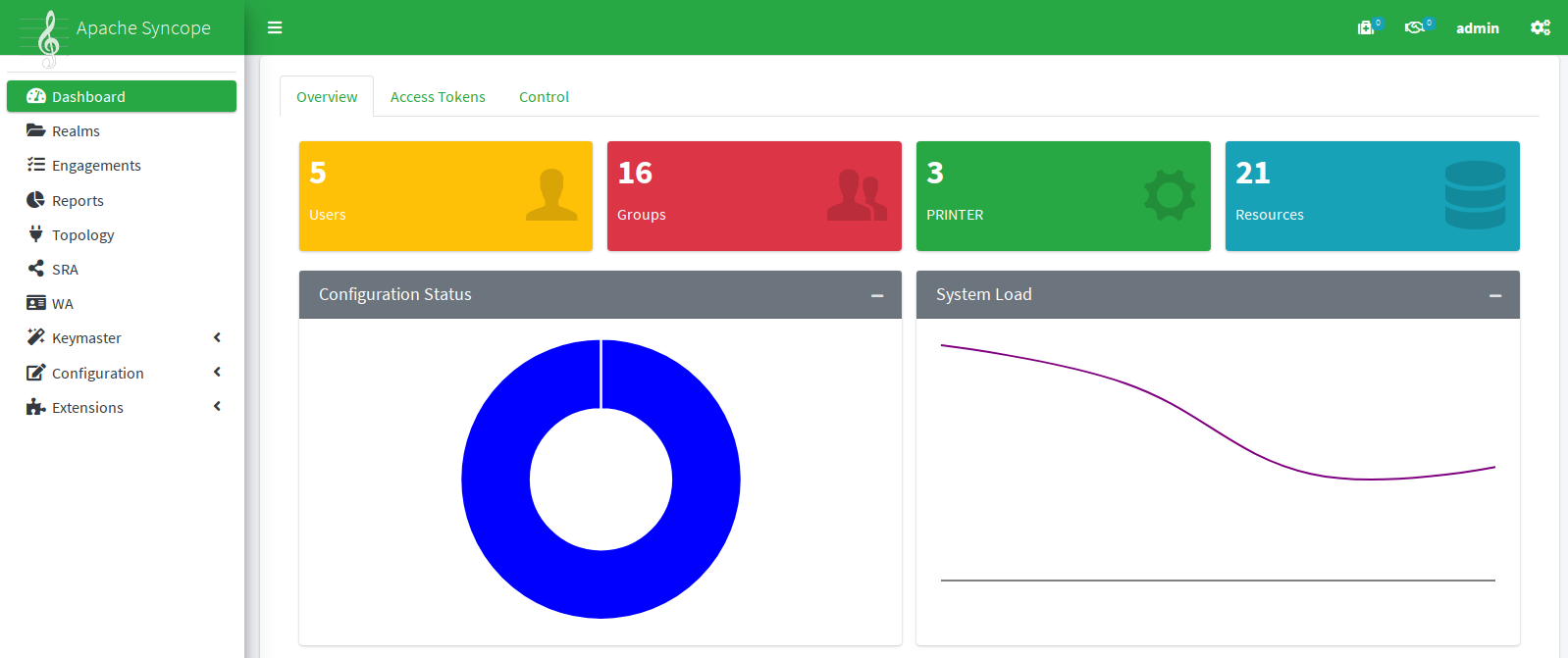

2.5. Admin UI

The Admin UI is the web-based console for configuring and administering running deployments, with full support for delegated administration.

The communication between Admin UI and Core is exclusively REST-based.

More details are available in the dedicated usage section.

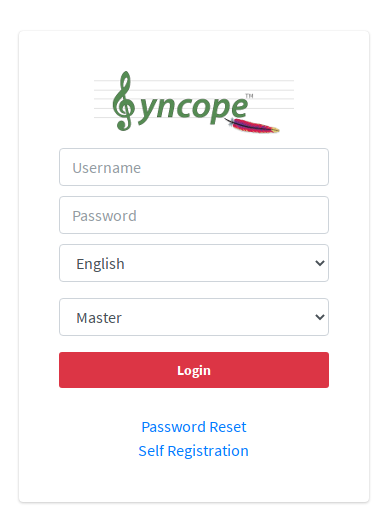

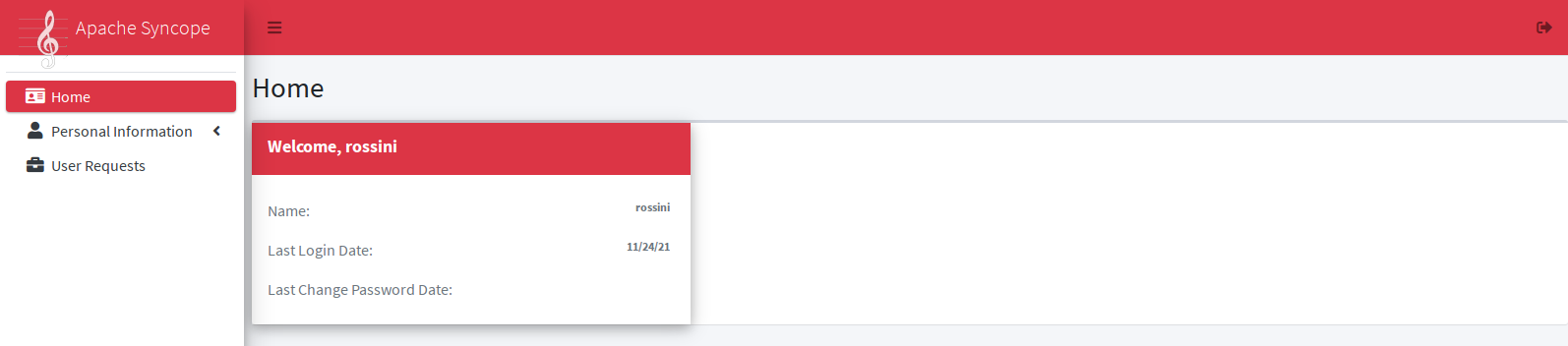

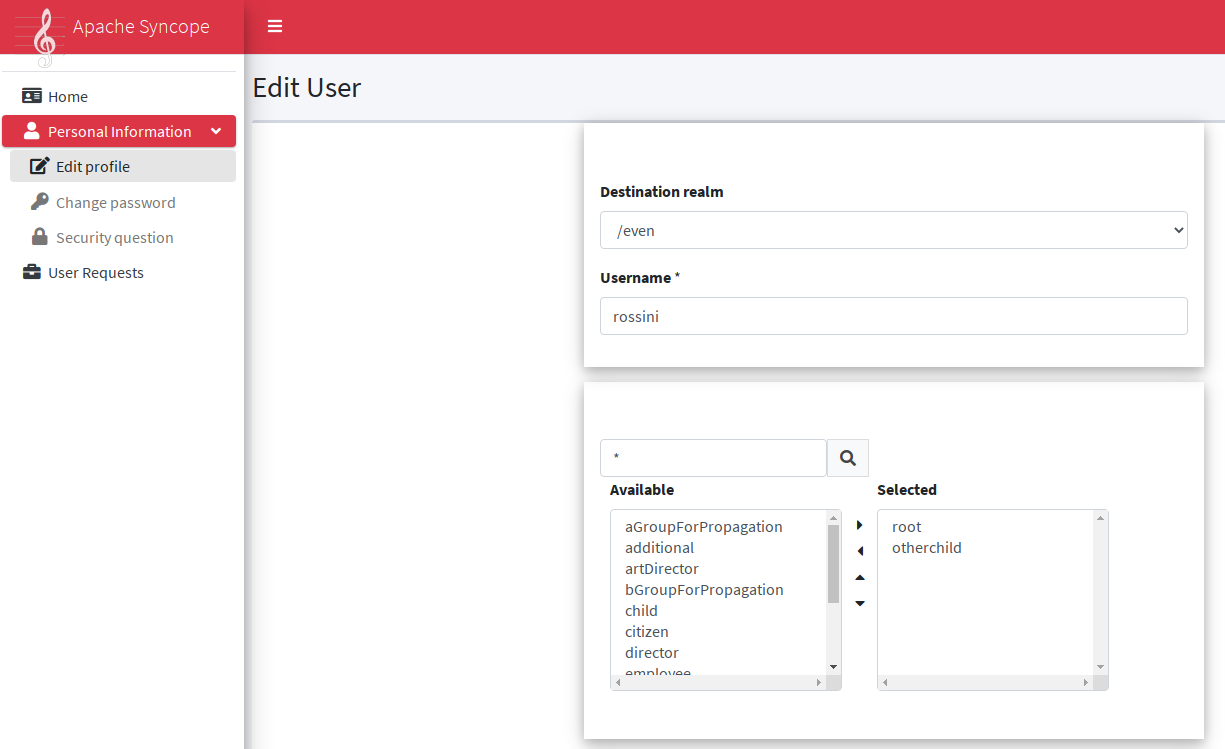

2.6. End-user UI

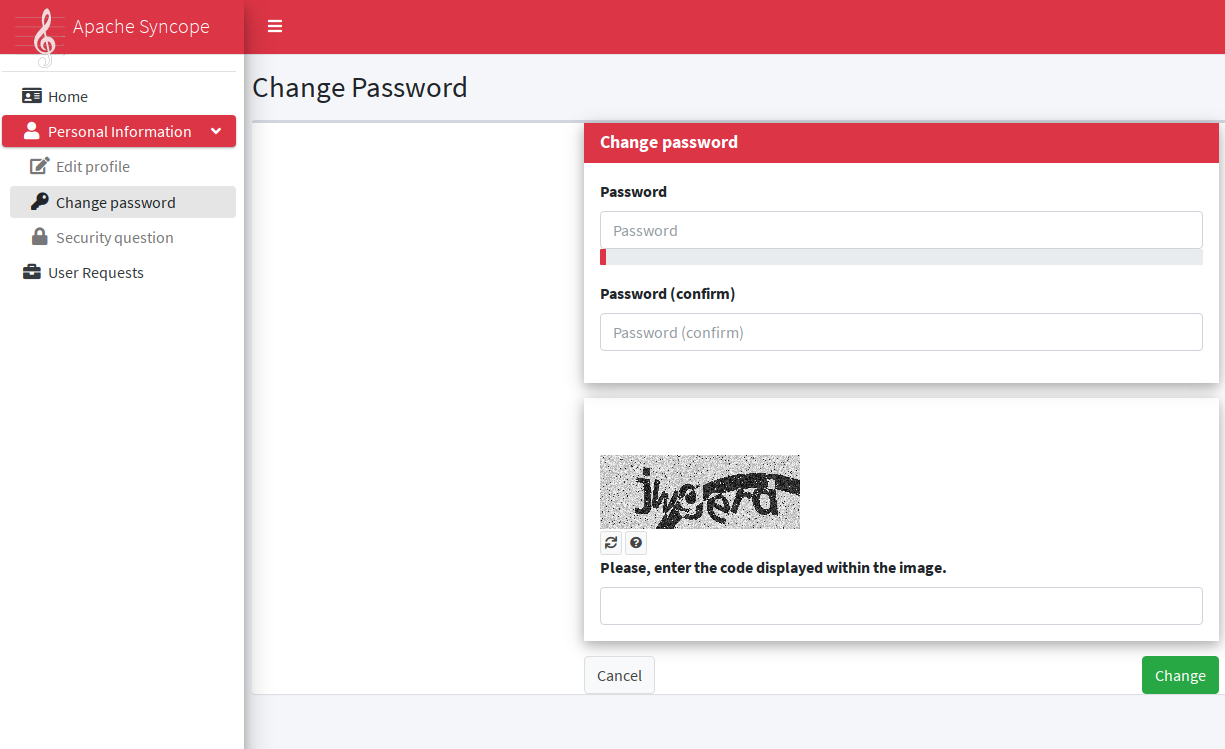

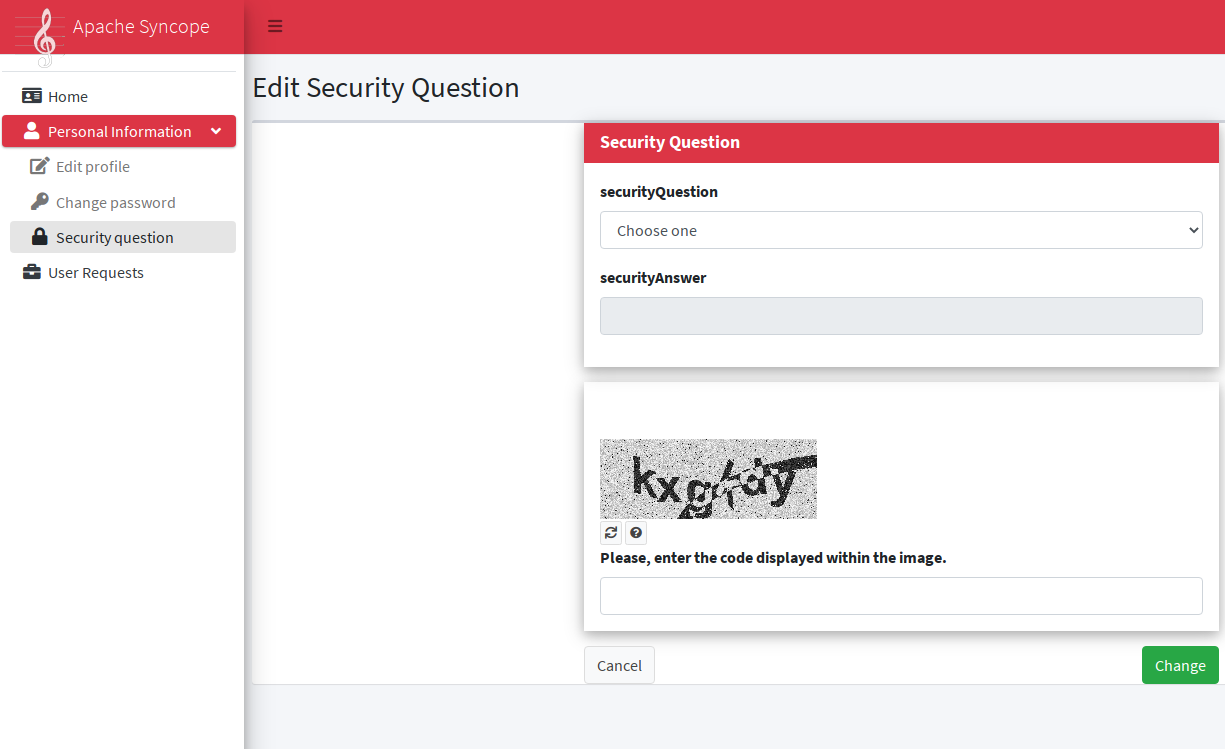

The End-user UI is the web-based application for self-registration, self-service and password reset.

The communication between End-user UI and Core is exclusively REST-based.

More details are available in the dedicated usage section.

2.7. Third Party Applications

Third-party applications are provided full access to IdM services by leveraging the REST interface, either via the Java Client Library (the basis of Admin UI and End-user UI) or plain HTTP calls.

3. Concepts

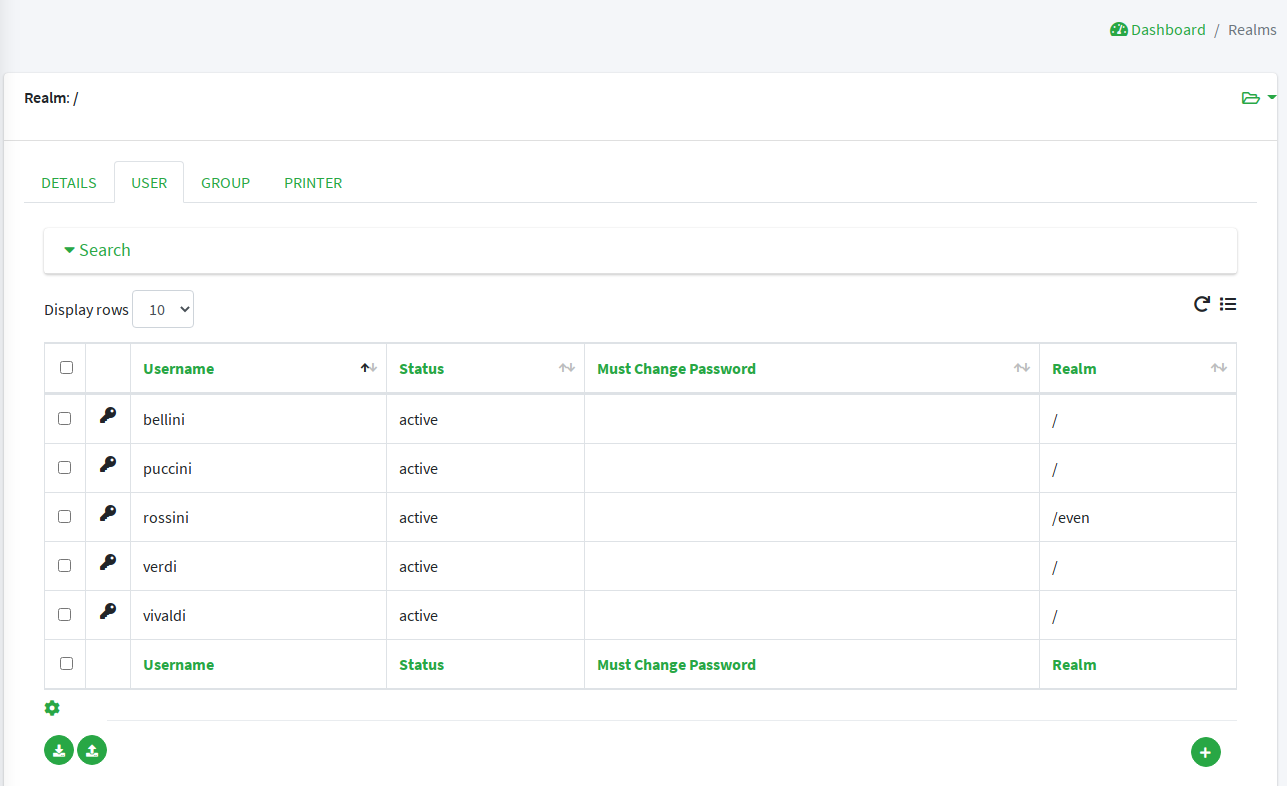

3.1. Users, Groups and Any Objects

Users, Groups and Any Objects are definitely the key entities to manage: as explained above in fact, the whole identity management concept is literally about managing identity data.

The following identities are supported:

-

Users represent the virtual identities build up of account information fragmented across the associated external resources

-

Groups have the dual purpose of representing entities on external resources supporting this concept (say LDAP or Active Directory) and putting together Users or Any Objects for implementing group-based provisioning, e.g. to dynamically associate Users or Any Objects to external resources

-

Any Objects actually cover very different entities that can be modeled: printers, services, sensors, …

For each of the identities above, Apache Syncope is capable of maintaining:

-

name(username, for Users) - string value uniquely identifying a specific user, group or any object instance; -

password(Users only) - hashed or encrypted value, depending on the selectedpassword.cipher.algorithm- see below for details - which can be used for authentication; -

set of attributes, with each attribute being a

(key,values)pair where-

keyis a string label (e.g.surname); -

valuesis a (possibly singleton) collection of data (e.g.[Doe]but also[john.doe@syncope.apache.org, jdoe@gmail.com]) ; the type of values that can be assigned to each attribute is defined via the schema matching thekeyvalue (e.g. plain, derived and virtual);

-

-

associations with external resources, for provisioning.

|

Which schemas can be populated for a given user / group / any object?

Each user / group / any object will be able to hold values for all schemas:

|

Moreover, Users and Any Objects can be part of Groups, or associated to other any objects.

|

Memberships and Relationships

When an user or an any object is assigned to a group, a membership is defined; the (static) members of a group benefit from type extensions. When an user or an any object is associated to another any object, a relationship is defined, of one of available relationship types. |

|

Static and Dynamic Memberships

Users and Any Objects are statically assigned to Groups when memberships are explicitly set. With group definition, however, a condition can be expressed so that all matching Users and Any Objects are

dynamic members of the group. |

|

Security Questions

The password reset process can be strengthened by requesting users to provide their configured answer to a given security question, chosen among the ones defined. |

3.2. Type Management

In order to manage which attributes can be owned by Users, Groups and any object, and which values can be provided, Apache Syncope defines a simple yet powerful type management system, vaguely inspired by the LDAP/X.500 information model.

3.2.1. Schema

A schema instance describes the values that attributes with that schema will hold; it can be defined plain, derived or virtual.

It is possible to define i18n labels for each schema, with purpose of improving presentation with Admin and End-user UIs.

Plain

Values for attributes with such schema types are provided during user, group or any object create / update.

When defining a plain schema, the following information must be provided:

-

Type

-

String -

Long- allows to specify a conversion pattern to / from string, according to DecimalFormat -

Double- allows to specify a conversion pattern to / from string, according to DecimalFormat -

Boolean -

Date- allows to specify a conversion pattern to / from string, according to DateFormat -

Enum-

enumeration values (mandatory)

-

enumeration labels (optional, values will be used alternatively)

-

-

Encrypted-

secret key (stored or referenced as Spring property)

-

cipher algorithm

-

whether transparent encryption is to be enabled, e.g. attribute values are stored as encrypted but available as cleartext on-demand (requires AES ciphering)

-

-

Binary- it is required to provide the declared mime type

-

-

Validator class - (optional) Java class validating the value(s) provided for attributes, see EmailAddressValidator for reference

-

Mandatory condition - JEXL expression indicating whether values for this schema must be necessarily provided or not; compared to simple boolean value, such condition allows to express complex statements like 'be mandatory only if this other attribute value is above 14', and so on

-

Unique constraint - make sure that no duplicate value(s) for this schema are found

-

Multivalue flag - whether single or multiple values are supported

-

Read-only flag - whether value(s) for this schema are modifiable only via internal code (say workflow tasks) or can be instead provided during ordinary provisioning

Derived

Sometimes it is useful to obtain values as arbitrary combinations of other attributes' values: for example, with

firstname and surname plain schemas, it is natural to think that fullname could be somehow defined as the

concatenation of firstname 's and surname 's values, separated by a blank space.

Derived schemas are always read-only and require a JEXL

expression to be specified that references plain schema types.

For the sample above, it would be

firstname + ' ' + surname

With derived attributes, values are not stored into the internal storage but calculated on request, by evaluating the related JEXL expression

Virtual

Virtual attributes are somehow linked from Identity Stores rather than stored internally.

The typical use case is when attribute values can change in the Identity Store without notice, and it is required to always have access to the most recent values that are available.

It can also be said that virtual schemas are for attributes whose ownership is not that of Syncope but of an Identity Store; the external resources for such Identity Stores are said to be the linking resources.

| As best practice, only attributes for which Apache Syncope retains ownership should be modeled as plain attributes; attributes for which Apache Syncope does not retain ownership should be modeled as virtual instead. |

When defining a virtual schema, the following information must be provided:

-

External resource - linking resource

-

External attribute - attribute to be linked on the external resource

-

Any Type - reference any type on the external resource

-

Read-only flag - whether the external attribute value(s) for this schema can only be read, or whether they can be written to as well

3.2.2. AnyTypeClass

Any type classes are aggregations of plain, derived and virtual schemas, provided with unique identifiers.

Classes can be assigned to any types and are also available as auxiliary (hence to be specified on a given user / group / any object instance) and for type extensions.

3.2.3. AnyType

Any types represent the type of identities that Apache Syncope is able to manage; besides the predefined USER and

GROUP, more types can be created to model workstations, printers, folders, sensors, services, …

For all Any Types that are defined, a set of classes can be selected so that instances of a given Any Type will be enabled to populate attributes for schemas in those classes.

Assuming that the following schemas are available:

-

plain:

firstname,surname,email -

derived:

fullname -

virtual:

enrollment

and that the following Any Type classes are defined:

-

minimal- containingfirstname,surnameandfullname -

member- containingemailandenrollment

and that the USER Any Type has only minimal assigned, then the following Users are valid (details are simplified to

increase readability):

{

"key": "74cd8ece-715a-44a4-a736-e17b46c4e7e6",

"type": "USER",

"realm": "/",

"username": "verdi",

"plainAttrs": [

{

"schema": "surname",

"values": [

"Verdi"

]

},

{

"schema": "firstname",

"values": [

"Giuseppe"

]

}

],

"derAttrs": [

{

"schema": "fullname",

"values": [

"Giuseppe Verdi"

]

}

]

}

{

"key": "1417acbe-cbf6-4277-9372-e75e04f97000",

"type": "USER",

"realm": "/",

"username": "rossini",

"auxClasses": [ "member" ],

"plainAttrs": [

{

"schema": "surname",

"values": [

"Rossini"

]

},

{

"schema": "firstname",

"values": [

"Gioacchino"

]

},

{

"schema": "email",

"values": [

"gioacchino.rossini@syncope.apache.org"

]

}

],

"derAttrs": [

{

"schema": "fullname",

"values": [

"Gioacchino Rossini"

]

}

],

"virAttrs": [

{

"schema": "enrollment",

"values": [

"154322"

]

}

]

}3.2.4. RelationshipType

Relationships allow the creation of a link between a user and an any object, or between two Any Objects; relationship types define the available link types.

The following any object of type PRINTER contains a relationship of type neighbourhood with another PRINTER

(details are simplified to increase readability):

{

"key": "fc6dbc3a-6c07-4965-8781-921e7401a4a5",

"type": "PRINTER",

"realm": "/",

"name": "HP LJ 1300n",

"auxClasses": [],

"plainAttrs": [

{

"schema": "model",

"values": [

"Canon MFC8030"

]

},

{

"schema": "location",

"values": [

"1st floor"

]

}

],

"relationships": [

{

"type": "neighbourhood",

"rightType": "PRINTER",

"rightKey": "8559d14d-58c2-46eb-a2d4-a7d35161e8f8"

}

]

}3.2.5. Type Extensions

When a user (or an any object) is part of a group, a membership is defined.

It is sometimes useful to define attributes which are bound to a particular membership: if, for example, the

University A and University B Groups are available, a student might have different e-mail addresses for each

university. How can this be modeled?

Type extensions define a set of classes associated to a group, that can be automatically assigned to a given user (or any object) when becoming a member of such group.

With reference to the sample above (details are simplified to increase readability):

{

"key": "c9b2dec2-00a7-4855-97c0-d854842b4b24",

"type": "USER",

"realm": "/",

"username": "bellini",

"memberships": [

{

"type": "Membership",

"rightType": "GROUP",

"rightKey": "bf825fe1-7320-4a54-bd64-143b5c18ab97",

"groupName": "University A",

"plainAttrs": [

{

"schema": "email",

"values": [

"bellini@university_a.net"

]

}

]

},

{

"type": "Membership",

"rightType": "GROUP",

"rightKey": "bf825fe1-7320-4a54-bd64-143b5c18ab96",

"groupName": "University B",

"plainAttrs": [

{

"schema": "email",

"values": [

"bellini@university_b.net"

]

}

]

}

]

}3.3. External Resources

3.3.1. Connector Bundles

Several Connector Bundles come included with Apache Syncope:

More Connector Bundles can be installed, if needed.

3.3.2. Connector Instance details

When defining a connector instance, the following information must be provided:

-

administration realm - the Realm under which administrators need to own entitlements in order to be allowed to manage this connector and all related external resources

-

connector bundle - one of the several already available, or some to be made from scratch, in order to fulfill specific requirements

-

pooling information

-

configuration - depending on the selected bundle, these are properties with configuration values: for example, with LDAP this means host, port, bind DN, object classes while with DBMS it would be JDBC URL, table name, etc.

-

capabilities - define what operations are allowed on this connector: during provisioning, if a certain operation is invoked but the corresponding capability is not set on the related connector instance, no actual action is performed on the underlying connector; the capabilities are:

-

AUTHENTICATE- consent to pass-through authentication -

CREATE- create objects on the underlying connector -

UPDATE- update objects on the underlying connector -

DELETE- delete objects on the underlying connector -

SEARCH- search / read objects from the underlying connector; used during pull withFULL RECONCILIATIONorFILTERED RECONCILIATIONmode -

SYNC- synchronize objects from the underlying connector; used during pull withINCREMENTALmode

-

|

Configuration and capability override

Capabilities and individual configuration properties can be set for override: in this case, all the external resources using the given connector instance will have the chance to override some configuration values, or the capabilities set. This can be useful when the same connector instance is shared among different resources, with little difference in the required configuration or capabilities. |

3.3.3. External Resource details

Given a selected connector instance, the following information is required to define an external resource:

-

priority - integer value, in use by the default propagation task executor

-

propagation actions - which actions shall be executed during propagation

-

trace levels - control how much tracing (including logs and execution details) shall be carried over during propagation, pull and push

-

configuration - see above

-

capabilities - see above

-

account policy - which account policy to enforce on Users, Groups and Any Objects assigned to this external resource

-

password policy - which password policy to enforce on Users, Groups and Any Objects assigned to this external resource

-

pull policy - which pull policy to apply during pull on this external resource

-

push policy - which push policy to apply during push on this external resource

3.3.4. Mapping

The mapping between internal and external data is of crucial importance when configuring an external resource. Such information, in fact, plays a key role for provisioning.

For each of the any types supported by the underlying connector, a different mapping is provided.

A mapping is essentially a collection of mapping items describing the correspondence between an user / group / any object attribute and its counterpart on the Identity Store represented by the current external resource. Each item specifies:

-

internal attribute - the schema acting as the source or destination of provisioning operations; it must be specified by an expression matching one of the following models:

-

schema- resolves to the attribute for the givenschema, owned by the mapped entity (user, group, any object) -

groups[groupName].schema- resolves to the attribute for the givenschema, owned by the group with namegroupName, if a membership for the mapped entity exists -

users[userName].schema- resolves to the attribute for the givenschema, owned by the user with nameuserName, if a relationship with the mapped entity exists -

anyObjects[anyObjectName].schema- resolves to the attribute for the givenschema, owned by the any object with nameanyObjectName, if a relationship with the mapped entity exists -

relationships[relationshipType][relationshipAnyType].schema- resolves to the attribute for the givenschema, owned by the any object of typerelationshipAnyType, if a relationship of typerelationshipTypewith the mapped entity exists -

memberships[groupName].schema- resolves to the attribute for the givenschema, owned by the membership for groupgroupNameof the mapped entity (user, any object), if such a membership exists -

privileges[applicationKey]- resolves to the list of privileges related to the given application, owned by the mapped entity (which can only be user, in this case)

-

-

external attribute - the name of the attribute on the Identity Store

-

transformers - JEXL expression or Java class implementing ItemTransformer ; the purpose is to transform values before they are sent to or received from the underlying connector

-

mandatory condition - JEXL expression indicating whether values for this mapping item must be necessarily available or not; compared to a simple boolean value, such condition allows complex statements to be expressed such as 'be mandatory only if this other attribute value is above 14', and so on

-

remote key flag - should this item be considered as the key value on the Identity Store, if no pull or push correlation rules are applicable?

-

password flag (Users only) - should this item be treated as the password value?

-

purpose - should this item be considered for propagation / push, pull, both or none?

Besides the items documented above, some more data needs to be specified for a complete mapping:

-

which object class shall be used during communication with the Identity Store; predefined are

__ACCOUNT__for Users and__GROUP__for Groups -

whether matches between user / group / any object’s attribute values and their counterparts on the Identity Store should be performed in a case-sensitive fashion or not

-

which schema shall be used to hold values for identifiers generated upon create by the Identity Store - required by some cloud providers not accepting provided values as unique references

-

the model for generating the DN (distinguished name) values - only required by some connector bundles as LDAP and Active Directory

The following mapping item binds the mandatory internal name schema with the external attribute cn for both

propagation / push and pull.

{

"key": "a2bf43c8-74cb-4250-92cf-fb8889409ac1",

"intAttrName": "name",

"extAttrName": "cn",

"connObjectKey": true,

"password": false,

"mandatoryCondition": "true",

"purpose": "BOTH"

}The following mapping item binds the optional internal aLong schema for the membership of the additional group

with the external attribute age for propagation / push only; in addition, it specifies a JEXL expression which appends .0

to the selected aLong value before sending it out to the underlying connector.

{

"key": "9dde8bd5-f158-499e-9d81-3d7fcf9ea1e8",

"intAttrName": "memberships[additional].aLong",

"extAttrName": "age",

"connObjectKey": false,

"password": false,

"mandatoryCondition": "false",

"purpose": "PROPAGATION",

"propagationJEXLTransformer": "value + '.0'"

}|

Object link and Realms hierarchy

When Object link is applicable - typically with LDAP or Active Directory, as said - the need may arise to map the Realms hierarchy into nested structures, as Organizational Units. In such cases, the following JEXL expressions can be set for Object link (assuming

|

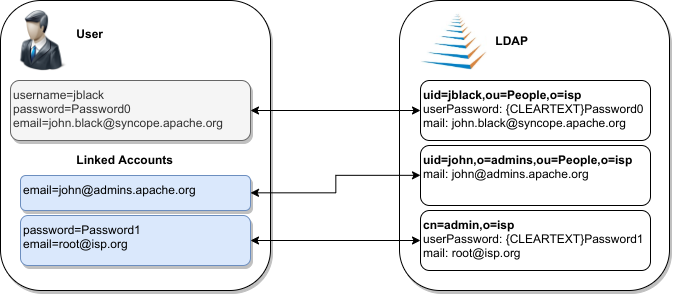

3.3.5. Linked Accounts

Sometimes the information provided by the mapping is not enough to define a one-to-one correspondence between Users / Groups / Any Objects and objects on External Resources.

There can be many reasons for this situation, including existence of so-called service accounts (typical with LDAP or Active Directory), or simply the uncomfortable reality that system integrators keep encountering when legacy systems are to be enrolled into a brand new IAM system.

Users can have, on a given External Resource with USER mapping defined:

-

zero or one mapped account

if the External Resource is assigned either directly or via Group membership. -

zero or more linked accounts

as internal representation of objects on the External Resource, defined in terms of username, password and / or plain attribute values override, with reference to the owning User.

Linked Accounts are propagated alongside with owning User - following the existing push correation rule if available - and pulled according to the given pull correation rule, if present.

3.4. Realms

Realms define a hierarchical security domain tree, primarily meant for containing Users, Groups and Any Objects.

Each realm:

-

has a unique name and a parent realm - except for the pre-defined root realm, which is named

/; -

is either a leaf or root of a sub-tree of realms;

-

is uniquely identified by the path from the root realm, e.g.

/a/b/cidentifies the sub-realmcin the sub-tree rooted atb, having in turnaas parent realm, directly under the root realm; -

optionally refers to account and password policies: such policies are enforced on all Users, Groups and Any Objects in the given realm and sub-realms, unless some sub-realms define their own policies.

If Users, Groups and Any Objects are members of a realm then they are also members of the parent realm: as a result, the root realm contains everything, and other realms can be seen as containers that split up the total number of entities into smaller pools.

This partition allows fine-grained control over policy enforcement and, alongside with entitlements and roles, helps to implement delegated administration.

|

Logic Templates

As with pull it is also possible to add templates to a realm. The values specified in the template are applied to entities belonging to that realm, hence this can be used as a mechanism for setting default values for attributes or external resources on entities. Logic Templates apply to all operations passing through the logic layer, e.g. triggered by REST requests. |

3.4.1. Realm Provisioning

Provisioning can be enabled for realms: mapping information can be provided so that realms are considered during propagation, pull and push execution.

A typical use case for realm provisioning is to model an organization-like structure on Identity Stores, as with LDAP and Active Directory.

3.4.2. LogicActions

When Users, Groups or Any Objects get created, updated or deleted in a realm, custom logic can be invoked by associating the given Realm with one or more implementations of the LogicActions interface.

| LogicActions apply to all operations passing through the logic layer, e.g. triggered by REST requests. |

3.5. Entitlements

Entitlements are basically strings describing the right to perform an operation on Syncope.

The components in the logic layer are annotated with

Spring Security to implement declarative security; in the following

code snippet taken from

RealmLogic

, the

hasRole expression

is used together with one of the standard entitlements to restrict access only to Users owning the REALM_SEARCH

entitlement.

@PreAuthorize("hasRole('" + IdRepoEntitlement.REALM_SEARCH + "')")

public List<RealmTO> list(final String fullPath) {Entitlements are granted via roles to Users, scoped under certain realms, thus allowing delegated administration.

|

The set of available entitlements is statically defined - even though extensions have the ability to enlarge the initial list : this is because entitlements are the pillars of the internal security model and are not meant for external usage. If you need to model the rights that Users own on external applications, look at privileges, instead. |

3.6. Privileges

Privileges model the rights that Users own on external applications.

Privileges are granted via roles to Users.

| The typical use case for privileges is to use Syncope as registry for available applications and privileges that users can own on those; once done this, external tools can query Syncope about the privileges owned by given users. |

3.7. Roles

Roles map a set of entitlements to a set of realms and / or dynamic realms.

In addition, Roles can be used to assign privileges to Users.

|

Static and Dynamic Memberships

Users are statically assigned to roles when assignments are explicitly set. However, a condition can be expressed in the role definition so that all matching Users are dynamic members of the role. |

3.7.1. Delegated Administration

The idea is that any user U assigned to a role R, which provides entitlements E1…En for realms Re1…Rem, can exercise Ei on entities (Users, Groups, Any Objects of given types - depending on Ei - or Connector Instances and External Resources) under any Rej or related sub-realms.

Moreover, any user U assigned to a role R, which provides entitlements E1…En for dynamic realms DR1..DRn, can exercise Ei on entities (Users, Groups, Any Objects of given types, depending on Ei) matching the conditions defined for any DRk.

|

Dynamic Realms limitations

Users to whom administration rights were granted via Dynamic Realms can only update Users, Groups and Any Objects,

not create nor delete. |

Let’s suppose that we want to implement the following scenario:

As by default, Apache Syncope will have defined the following entitlements, among others:

-

USER_CREATE -

USER_UPDATE -

GROUP_UPDATE

Hence, here is how entitlements should be assigned (via roles) to administrators in order to implement the scenario above:

-

Administrator A:

USER_CREATEon R5 -

Administrator B:

USER_UPDATEon R6 and R8 -

Administrator C:

GROUP_UPDATEon R8

|

Delegated Administration via Admin Console

When administering via REST, the entitlements to be granted to delegated administrators are straightforward:

When using the Admin Console, instead, more entitlements are generally required: this because the underlying

implementation takes care of simplifying the UX as much as possible.

|

Group Ownership

Groups can designate a User or another Group as owner.

The practical consequence of this setting is that Users owning a Group (either because they are directly set as owners or members of the owning Group) is that they are entitled to

-

perform all operations (create, update, delete, …) on the owned Group

-

perform all operations (create, update, delete, …) on all User and Any Object members of the owner Group, with exception of removing members from the Group itself

regardless of the Realm.

The actual Entitlements are assigned through the predefined GROUP_OWNER Role:

-

USER_SEARCH -

USER_READ -

USER_CREATE -

USER_UPDATE -

USER_DELETE -

ANYTYPECLASS_READ -

ANYTYPE_LIST -

ANYTYPECLASS_LIST -

RELATIONSHIPTYPE_LIST -

ANYTYPE_READ -

REALM_SEARCH -

GROUP_SEARCH -

GROUP_READ -

GROUP_UPDATE -

GROUP_DELETE

The GROUP_OWNER Role can be updated to adjust the set of assigned Entitlements.

3.7.2. Delegation

With Delegation, any user can delegate other users to perform operations on their behalf.

In order to set up a Delegation, the following information shall be provided:

-

delegating User (mandatory) - administrators granted with

DELEGATION_CREATEEntitlement can create Delegations for all defined Users; otherwise, the only accepted value is the User itself; -

delegated User (mandatory) - any User defined, distinct from delegating;

-

start (mandatory) - initial timestamp from which the Delegation is considered effective;

-

end (optional) - final timestamp after which the Delegation is not considered effective: when not provided, Delegation will remain valid unless deleted;

-

roles (optional) - set of Roles granted by delegating to delegated User: only Roles owned by delegating can be granted, when not provided all owned Roles are considered as part of the Delegation.

| Audit entries generated when operating under Delegation will report both delegating and delegated users. |

3.8. Provisioning

As described above, provisioning is actually the core feature provided by Apache Syncope.

Essentially, it can be seen as the process of keeping the identity data synchronized between Syncope and related external resources, according to the specifications provided by the mapping. It does this by performing create, update and delete operations onto the internal storage or external resources via connectors.

3.8.1. Overview

The picture below contains an expanded view of the core architecture, with particular reference to the components involved in the provisioning process.

The provisioning operations can be initiated in several different ways:

-

by creating, updating or deleting Users, Groups or Any Objects via REST (thus involving the underlying logic layer)

-

by requesting execution of pull or push tasks via REST

-

by triggering periodic pull or push task executions

|

Provisioning Managers

The provisioning operations are defined by the provisioning manager interfaces: Default implementations are available: |

3.8.2. Propagation

Whenever a change is performed via REST on Users, Groups or Any Objects:

-

a set of propagation tasks is generated, one for each associated external resource;

-

the generated propagation tasks are executed, e.g. the corresponding operations (create, update or delete) are sent out, via connectors, to the configured Identity Stores; the tasks can be saved for later re-execution.

|

Which external resources?

Depending on the entity being created / updated / deleted, different external resources are taken into account by the propagation process:

|

|

Adequate capabilities to Connectors and External Resources

Ensure to provide an adequate set of capabilities to underlying Connectors and

External Resources for the actual operations to perform, otherwise the Propagation Tasks will report |

|

Propagate password values

Password values are kept in the internal storage according to the During propagation, Syncope fetches all data of the given User, then prepares the attributes to propagate, according to the provided mapping; password has a special treatment:

Password values are always sent to External Resources wrapped as ConnId GuardedString objects. |

By default, the propagation process is controlled by the PriorityPropagationTaskExecutor, which implements the following logic:

-

sort the tasks according to the related resource’s priority, then execute sequentially

-

tasks for resources with no priority are executed afterwards, concurrently

-

the execution of a given set of tasks is halted (and global failure is reported) whenever the first sequential task fails

-

status and eventual error message (in case of no resource priority) can be saved for reporting, in the case where the related external resource was configured with adequate tracing

-

minimize the set of operations to be actually performed onto the Identity Store by attempting to read the external object corresponding to the internal entity and comparing with the modifications provided

|

Create or update?

The minimization performed by

|

Different implementations of the PropagationTaskExecutor interface can be provided, in case the required behavior does not fit into the provided implementation.

PropagationActions

The propagation process can be decorated with custom logic to be invoked around task execution, by associating external resources to one or more implementations of the PropagationActions interface.

Some examples are included by default, see table below.

Required for setup of an External Resource based on the ConnId Azure connector bundle. |

|

If no password value was already provided in the propagation task, sends out the internal password hash value to DBMS;

the cipher algorithm associated with the password must match the value of |

|

If no password value was already provided in the propagation task, random password value is generated according to the defined password policy and sent to the External Resource. |

|

Required for setup of an External Resource based on the ConnId GoogleApps connector bundle. |

|

If a User is associated with a Group in Syncope, keep the corresponding User as a member of the corresponding Group in LDAP or AD. |

|

If no password value was already provided in the propagation task, sends out the internal password hash value to LDAP;

the cipher algorithm associated with the password must match the value of |

3.8.3. Pull

Pull is the mechanism used to acquire identity data from Identity Stores; for each external resource, one or more pull tasks can be defined, run and scheduled for period execution.

Pull task execution involves querying the external resource for all mapped any types, sorted according to the order defined by a custom implementation of ProvisionSorter or its default implementation DefaultProvisionSorter .

Each entity is then processed in an isolated transaction; a retrieved entity can be:

-

matching if a corresponding internal entity was found, according to the mapping of - or the pull policy set for, if present - the enclosing external resource;

-

unmatching otherwise.

Once this has been assessed, entities are processed according to the matching / unmatching rules specified for the pull task: by default, unmatching entities get created internally, and matching entities are updated.

|

Pull Mode

The Identity Store can be queried in different ways, depending on the pull mode that is specified: |

|

Pull Templates

As the values specified in the template are applied to pulled entities, this can be used as mechanism for setting default values for attributes or external resources on entities. A typical use case is, when pulling Users from the external resource |

PullActions

The pull process can be decorated with custom logic to be invoked around task execution, by associating pull tasks to one or more implementations of the PullActions interface.

Some examples are included by default, see the table below.

If a User is associated with a Group in AD, keep the corresponding User as a member of the corresponding Group in Syncope. |

|

If a User is associated with a Group in LDAP, keep the corresponding User as a member of the corresponding Group in Syncope. |

|

Import hashed password values from LDAP;

the cipher algorithm associated with the password must match the value of |

|

Import hashed password values from DBMS;

the cipher algorithm associated with the password must match the value of |

Remediation

Errors during pull might arise for various reasons: values might not be provided for all mandatory attributes or fail the configured validation, delete User as consequence of an incremental change’s processing might be blocked because such User is configured as Group owner, and so on.

When Remediation is enabled for a certain Pull Task, execution errors are reported to administrators, which are given the chance to examine and possibly fix, or just discard.

3.8.4. Push

With push, the matching set of internal entities can be sent to Identity Stores - mainly for (re)initialization purposes; for each external resource, one or more push tasks can be defined, run and scheduled for period execution.

Push task execution involves querying the internal storage for all mapped any types, sorted according to the order defined by a custom implementation of ProvisionSorter or its default implementation DefaultProvisionSorter .

Each entity is then processed in an isolated transaction; an internal entity can be:

-

matching if a corresponding remote entity was found, according to the push policy set for the enclosing external resource;

-

unmatching otherwise.

Once this has been assessed, entities are processed according to the matching / unmatching rules specified for the push task: by default, unmatching entities are pushed to Identity Stores, and matching entities are updated.

PushActions

The push process can be decorated with custom logic to be invoked around task execution, by associating push tasks to one or more implementations of the PushActions interface.

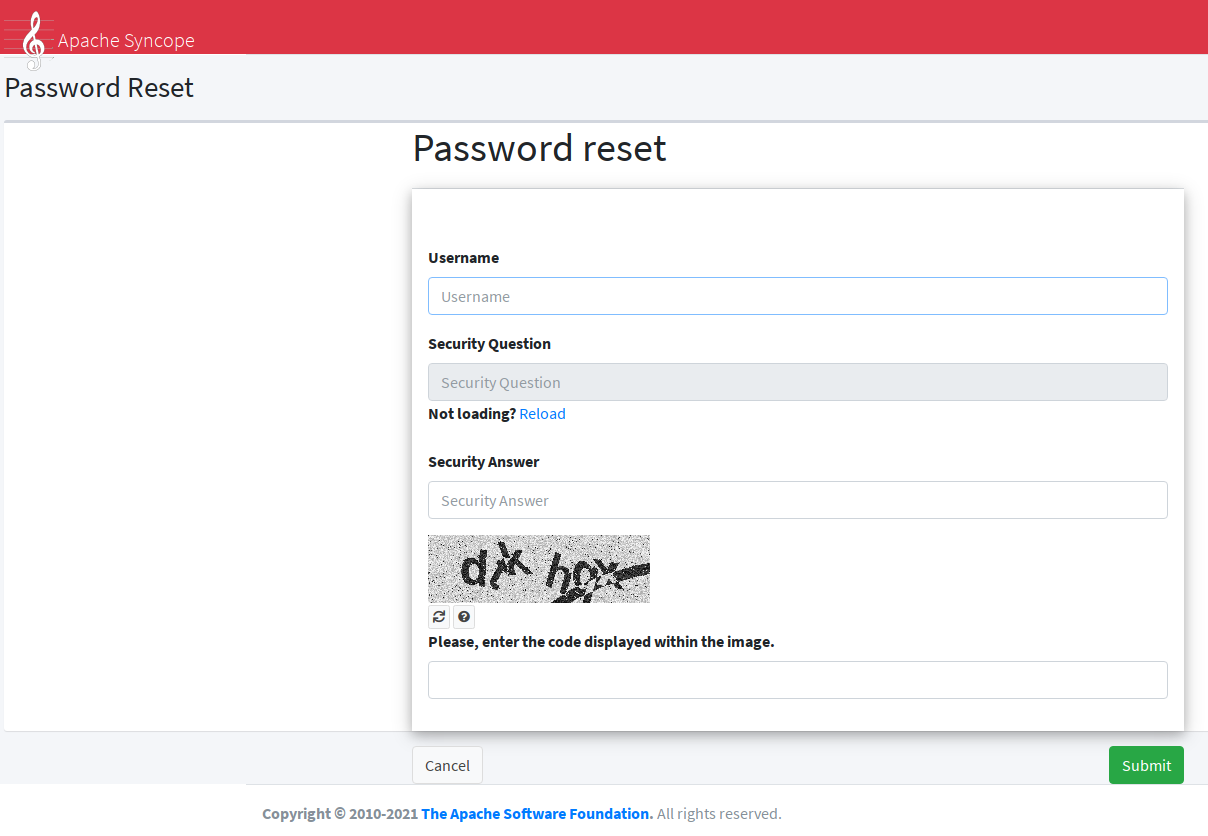

3.8.5. Password Reset

When users lost their password, a feature is available to help gaining back access to Apache Syncope: password reset.

The process can be outlined as follows:

-

user asks for password reset, typically via end-user

-

user is asked to provide an answer to the security question that was selected during self-registration or self-update

-

if the expected answer is provided, a unique token with time-constrained validity is internally generated and an e-mail is sent to the configured address for the user with a link - again, typically to the end-user - containing such token value

-

user clicks on the received link and provides new password value, typically via end-user

-

user receives confirmation via e-mail

|

The outlined procedure requires a working e-mail configuration. In particular:

|

|

The process above requires the availability of security questions that users can pick up and provide answers for. The usage of security questions can be however disabled by setting the |

|

Once provided via Enduser Application, the answers to security questions are never reported, neither via REST or Admin UI to administrators, nor to end-users via Enduser Application. This to avoid any information disclosure which can potentially lead attackers to reset other users' passwords. |

| In addition to the password reset feature, administrators can set a flag on a given user so that he / she is forced to update their password value at next login. |

3.9. Policies

Policies control different aspects. They can be used to fine-tune and adapt the overall mechanisms to the particularities of the specific domain in which a given Apache Syncope deployment is running.

|

Policy Composition

When defining policies and associating them with different realms and resources, it is common to observe that several policies of the same type have to be enforced on the same user, group or any object. In such cases, Apache Syncope transparently composes all of the candidate policies and obtains a single applicable policy which contains all the conditions of the composing policies; this process, however, is not guaranteed to be successful, as different policies of the same type might provide conflicting clauses. |

3.9.1. Account

Account policies allow the imposition of constraints on username values, and are involved in the authentication process.

|

When set for realm R, an account policy is enforced on all Users of R and sub-realms. When set for resource R, an account policy is enforced on all Users that have R assigned. |

When defining an account policy, the following information must be provided:

-

max authentication attempts - how many times Users are allowed to fail authentication before getting suspended

-

propagate suspension - when suspended as a consequence of too many authentication failures, should Users also be suspended on associated resources or not?

-

pass-through resources - which external resources are involved with pass-through authentication

-

rules - set of account rules to evaluate with the current policy

Account Rules

Account rules define constraints to apply to username values.

Some implementations are provided out-of-the-box, custom ones can be provided on given deployment.

|

As

As |

Default Account Rule

The default account rule (enforced by DefaultAccountRule and configurable via DefaultAccountRuleConf ) contains the following controls:

-

maximum length - the maximum length to allow;

0means no limit set; -

minimum length - the minimum length to allow;

0means no limit set; -

pattern - Java regular expression pattern to match;

NULLmeans no match is attempted; -

all uppercase - are lowercase characters allowed?

-

all lowercase - are uppercase characters allowed?

-

words not permitted - list of words that cannot be present, even as a substring;

-

schemas not permitted - list of schemas whose values cannot be present, even as a substring;

-

prefixes not permitted - list of strings that cannot be present as a prefix;

-

suffixes not permitted - list of strings that cannot be present as a suffix.

Before being able to configure the default account rule as mentioned above, you will need to first create a JAVA

ACCOUNT_RULE implementation for the org.apache.syncope.common.lib.policy.DefaultAccountRuleConf

class.

|

Pass-through Authentication

During user authentication, if the resulting applicable account policy defines pass-through

resources, the provided credentials are verified first against the internal storage, then against each configured

external resource (provided that the underlying connector instance has the AUTHENTICATE

capability set): the first check that succeeds will successfully authenticate the user.

This feature allows, for example, to reuse credentials contained in Identity Stores (without extracting them), instead of storing password values in the internal storage. It also facilitates implementing authentication chains.

3.9.2. Password

Password policies allow the imposition of constraints on password values.

|

When set for realm R, a password policy is enforced on all Users of R and sub-realms. When set for resource R, a password policy is enforced on all Users that have R assigned. |

When defining a password policy, the following information must be provided:

-

allow null password - whether a password is mandatory for Users or not

-

history length - how many values shall be considered in the history

-

rules - set of password rules to evaluate with the current policy

Password Rules

Password rules define constraints to apply to password values.

Some implementations are provided out-of-the-box, custom ones can be provided on given deployment.

|

As

As |

Default Password Rule

The default password rule (enforced by DefaultPasswordRule and configurable via DefaultPasswordRuleConf ) is based on Passay and contains the following controls:

-

maximum length - the maximum length to allow (

0means no limit set); -

minimum length - the minimum length to allow (

0means no limit set); -

alphabetical - the number of alphabetical characters required;

-

uppercase - the number of uppercase characters required;

-

lowercase - the number of lowercase characters required;

-

digit - the number of digits required;

-

special - the number of special characters required;

-

special chars - the set of special characters allowed;

-

illegal chars - the set of characters not allowed;

-

repeat same - the size of the longest sequence of repeating characters allowed;

-

username allowed - whether a username value can be used;

-

words not permitted - list of words that cannot be present, even as a substring;

-

schemas not permitted - list of schemas whose values cannot be present, even as a substring;

| The default password rule can be extended to cover specific needs, relying on the whole set of features provided by Passay. |

Before being able to configure the default password rule as mentioned above, you will need to first create a JAVA

PASSWORD_RULE implementation for the org.apache.syncope.common.lib.policy.DefaultPasswordRuleConf

class.

|

"Have I Been Pwned?" Password Rule

This password rule (enforced by HaveIBeenPwnedPasswordRule and configurable via HaveIBeenPwnedPasswordRuleConf ) checks the provided password values against the popular "Have I Been Pwned?" service.

Before being able to configure the "Have I Been Pwned?" password rule as mentioned above, you will need to first create

a JAVA PASSWORD_RULE implementation for the

org.apache.syncope.common.lib.policy.HaveIBeenPwnedPasswordRuleConf class.

|

3.9.3. Access

Access policies provide fine-grained control over the access rules to apply to client applications.

The following access policy configurations are available by default:

It describes whether the client application is allowed to use WA, allowed to participate in single sign-on authentication, etc; additionally, it may be configured to require a certain set of principal attributes that must exist before access can be granted. |

|

Make access decisions based on HTTP request properties as client IP address and user-agent. |

|

Delegate access decisions to a remote endpoint by receiving the authenticated principal as url parameter of a |

|

Access is only allowed within the configured timeframe. |

| Access Policy instances are dynamically translated into CAS Service Access Strategy. |

3.9.4. Attribute Release

Attribute Release policies decide how attributes are selected and provided to a given

client application in the final WA response.

Additionally, each instance has the ability to apply an optional filter to weed out their attributes based on their

values.

| Attribute Release Policy instances are dynamically translated into CAS Attribute Release Policy. |

3.9.5. Authentication

WA presents a number of strategies for handling authentication security policies, based on the defined

authentication modules.

Authentication Policies in general control the following:

-

Should the authentication chain be stopped after a certain kind of authentication failure?

-

Given multiple authentication handlers in a chain, what constitutes a successful authentication event?

Authentication Policies are typically activated after:

-

An authentication failure has occurred.

-

The authentication chain has finished execution.

Typical use cases of authentication policies may include:

-

Enforce a specific authentication module’s successful execution, for the entire authentication event to be considered successful.

-

Ensure a specific class of failure is not evident in the authentication chain’s execution log.

-

Ensure that all authentication modules in the chain are executed successfully, for the entire authentication event to be considered successful.

| Authentication Policy instances are dynamically translated into CAS Authentication Policy. |

3.9.6. Propagation

Propagation policies are evaluated during the execution of propagation tasks and are meant to tweak the propagation process by setting the pre-fetch option or letting Syncope to retry the configured operations in case of failures.

When defining a propagation policy, the following information must be provided:

-

fetch around provisioning - the default behavior is to attempt to read upfront the object being propagated (to ensure it exists or not, depending on the actual operation scheduled to perform) and to read it again afterwards (to check the effective results); this can be disabled

-

update delta - in case of update, all object attributes are propagated by default; when enabled, only the changed attributes will be instead propagated

-

max number of attempts

-

back-off strategy

-

FIXED- pauses for a fixed period of time before continuing -

EXPONENTIAL- increases the back off period for each retry attempt in a given set up to a limit -

RANDOM- chooses a random multiple of the interval that would come from a simple deterministic exponential

-

3.9.7. Pull

Pull policies are evaluated during the execution of pull tasks and are meant to:

-

help match existing Users, Groups and Any Objects during pull, thus generating update events (rather than create)

-

determine which action shall be taken in case such match is not unique (e.g. what to do if the same external account can be mapped to two distinct Users in Apache Syncope?)

|

When set for resource R, a pull policy is enforced on all Users, Groups and Any Objects pulled from R. |

When defining a pull policy, the following information must be provided:

-

conflict resolution action

-

IGNORE- do nothing -

FIRSTMATCH- pull first matching object only -

LASTMATCH- pull last matching object only -

ALL- pull all matching objects

-

-

rules - set of correlation rules to evaluate with the current policy; for each defined any type, a different rule is required

Pull Correlation Rules

Pull correlation rules define how to match objects received from External Resources with existing Users (including Linked Accounts), Groups or Any Objects.

The default implementation attempts to match entities on the basis of the values of the provided plain attributes, according to the available mapping.

|

Custom pull correlation rules can be provided by implementing the PullCorrelationRule interface. |

3.9.8. Push

Push policies are evaluated during the execution of push tasks.

|

When set for resource R, a push policy is enforced on all Users, Groups and Any Objects pushed to R. |

Push Correlation Rules

Push correlation rules define how to match Users (including Linked Accounts), Groups or Any Objects with objects existing on External Resources.

The default implementation attempts to match entities on the basis of the values of the provided plain attributes, according to the available mapping.

|

Custom push correlation rules can be provided by implementing the PushCorrelationRule interface. |

3.9.9. Ticket Expiration

Ticket Expiration policies control the duration of various types of WA sessions.

| Ticket Expiration Policy instances are dynamically translated into their CAS equivalent. |

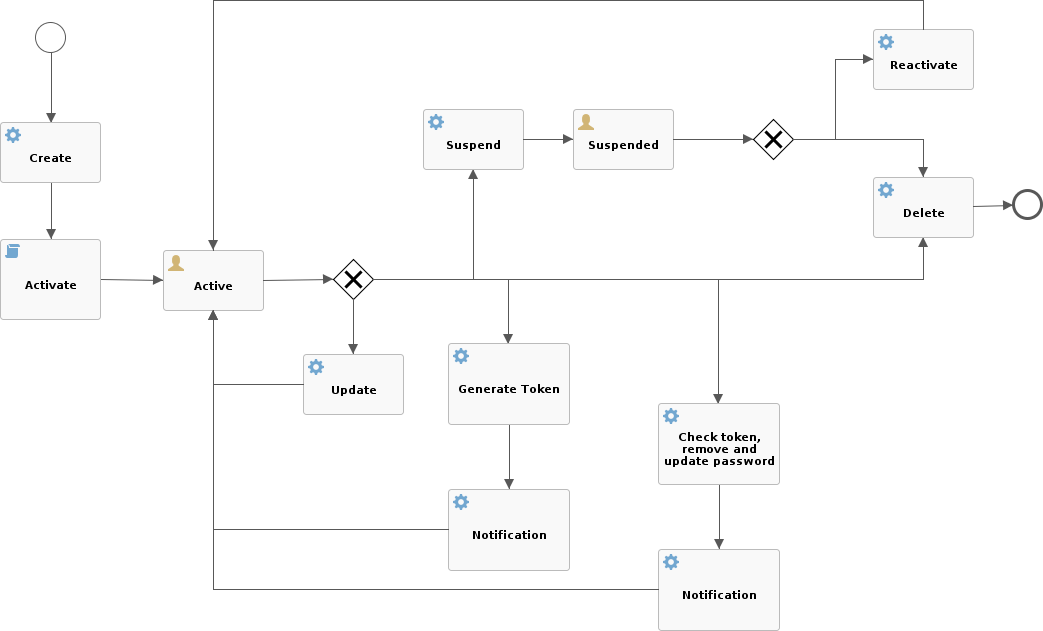

3.10. Workflow

Workflow manages the internal identity lifecycle by defining statuses and transitions that every user, group or any object in Apache Syncope will traverse. A workflow instance is started once identities get created, and shut down when they are removed.

Workflow is triggered during the provisioning process as the first step in creating, updating or deleting identities into the internal storage.

|

Workflow Adapters

The workflow features are defined by the workflow adapter interfaces: Default implementations are available: |

|

Which workflow adapter for users?

|

3.10.1. Flowable User Workflow Adapter

An advanced adapter is provided for Users, based on Flowable, one of reference open source BPMN 2.0 implementations.

The FlowableUserWorkflowAdapter is bootstrapped from userWorkflow.bpmn20.xml and presents several advantages and more features, if compared to the default user adapter:

-

Besides mandatory statuses, which are modeled as BPMN

userTaskinstances, more can be freely added at runtime, provided that adequate transitions and conditions are also inserted; more details about available BPMN constructs are available in the Flowable User Guide.

Additional statuses and transitions allow the internal processes of Apache Syncope to better adapt to suit organizational flows. -

Custom logic can be injected into the workflow process by providing BPMN

serviceTaskinstances. -

Flowable forms are used for implementing approval.

-

admin console supports web-based graphical modeling of the workflow definition.

Approval

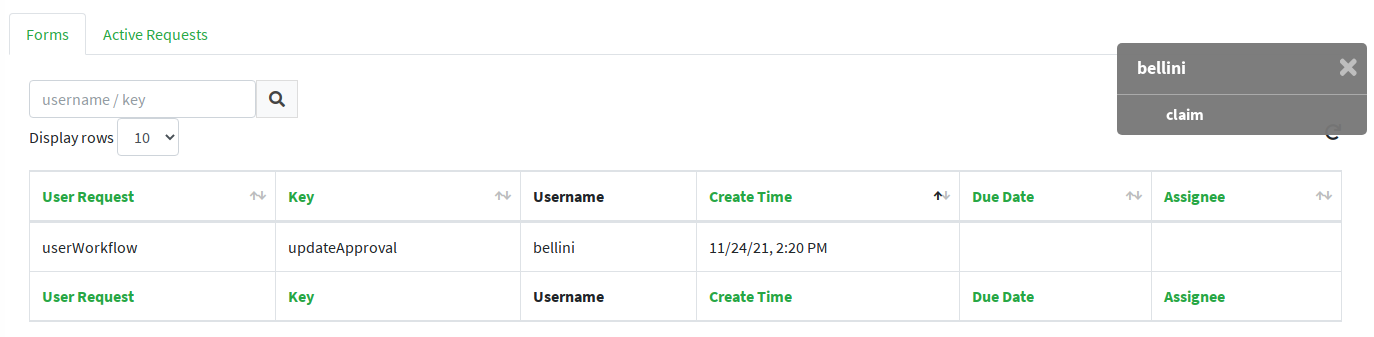

Every transition in the Flowable user workflow definition can be subjected to approval.

The underlying idea is that some kind of self-modifications (group memberships, external resource assignments, …) might not be allowed to 'plain' Users, as there could be conditions which require management approval. Managers could also be asked to complete the information provided before the requested operation is finished.

In order to define an approval form, a dedicated BPMN userTask needs to be defined, following the rules established

for Flowable forms.

|

What is required for administrators to manage approval?

The following conditions must be met, for an User

The special super-user |

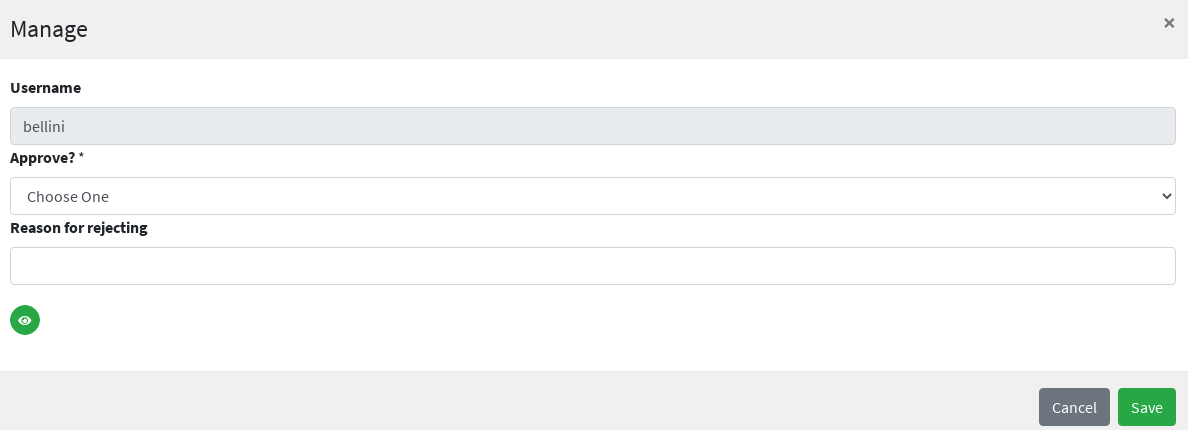

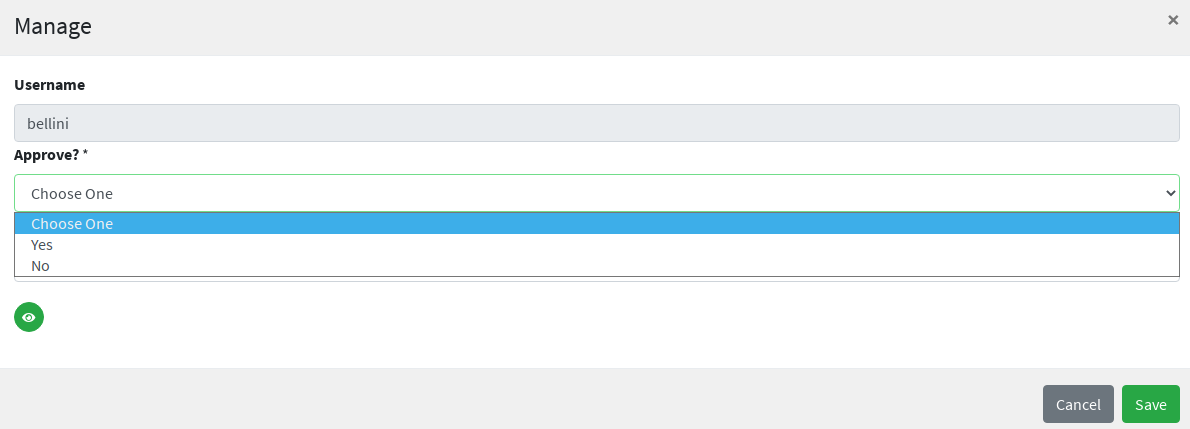

The snippet below shows how to define an approval form in XML; the same operation can be performed via the GUI editor provided by admin console.

<userTask id="createApproval" name="Create approval"

flowable:candidateGroups="managingDirector"

flowable:formKey="createApproval"> (1)

<extensionElements>

<flowable:formProperty id="username" name="Username" type="string"

expression="${userTO.username}" writable="false"/> (2)

<flowable:formProperty id="approve" name="Approve?" type="boolean"

variable="approve" required="true"/> (3)

<flowable:formProperty id="rejectReason" name="Reason for rejecting" type="string"

variable="rejectReason"/>

</extensionElements>

</userTask>| 1 | formKey and id must be unique across the workflow definition, name is displayed by the admin console;

candidateGroups and candidateUsers might be defined, even both, to indicate which Groups or Users should be

managing these approvals; if none are specified, only admin is entitled to manage such approval |

| 2 | expression will be evaluated against the current requesting user (as workflow variable) and related properties;

read-only form input can be defined by setting writable="false" |

| 3 | exporting approval inputs into workflow variables is possible via the variable attribute; required form input can

be defined by setting required="true" |

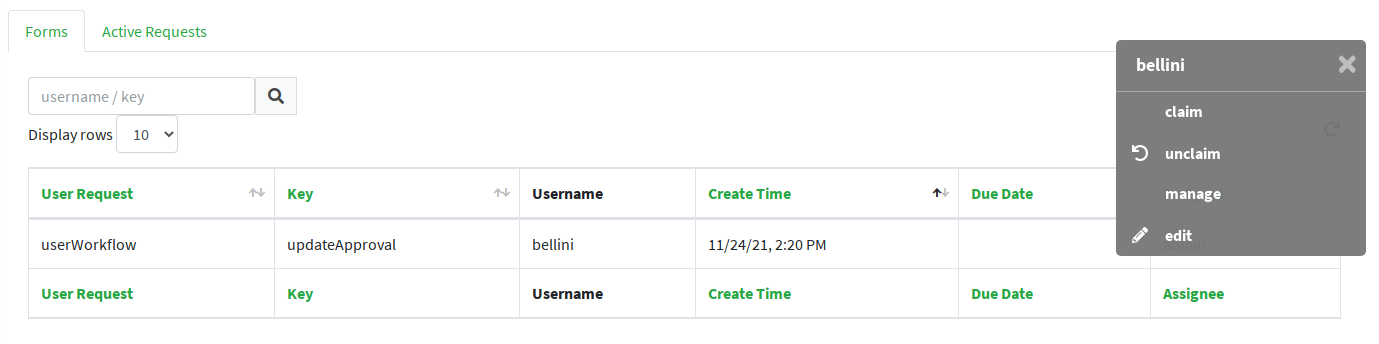

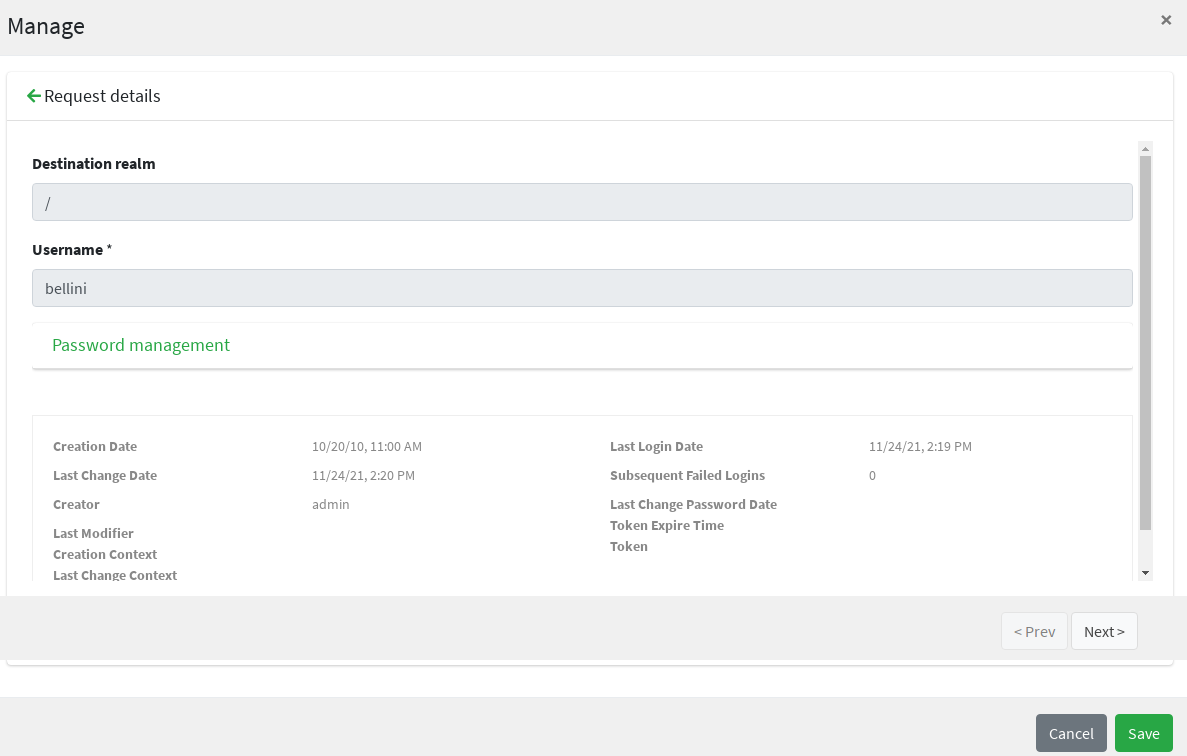

Once the form is defined, any modification subject to that approval will be manageable via the admin console, according to the following flow (the actual operations on the admin console for the sample above are reported below):

-

administrator A sees the new approval notifications

-

administrator A claims the approval and is then allowed to manage it

-

administrator A reviews the updated user, with ongoing modification applied (no actual modification performed yet)

-

administrator A can approve or reject such modification

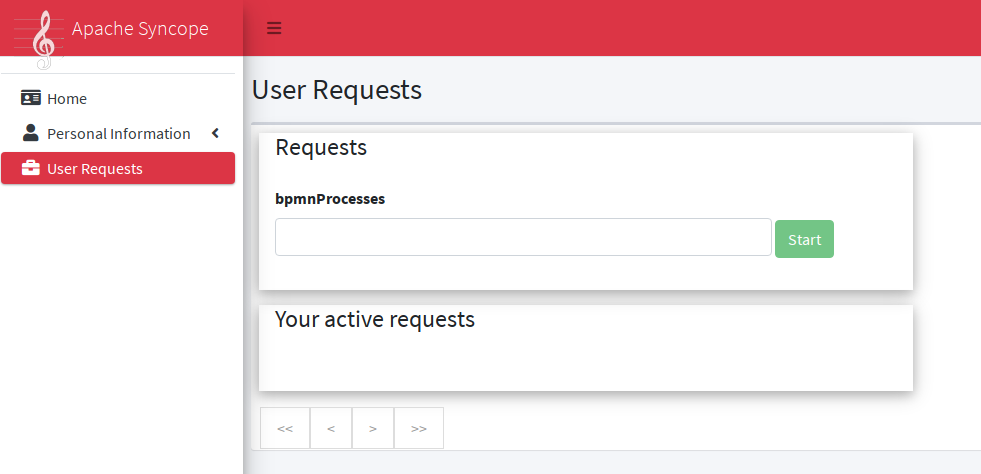

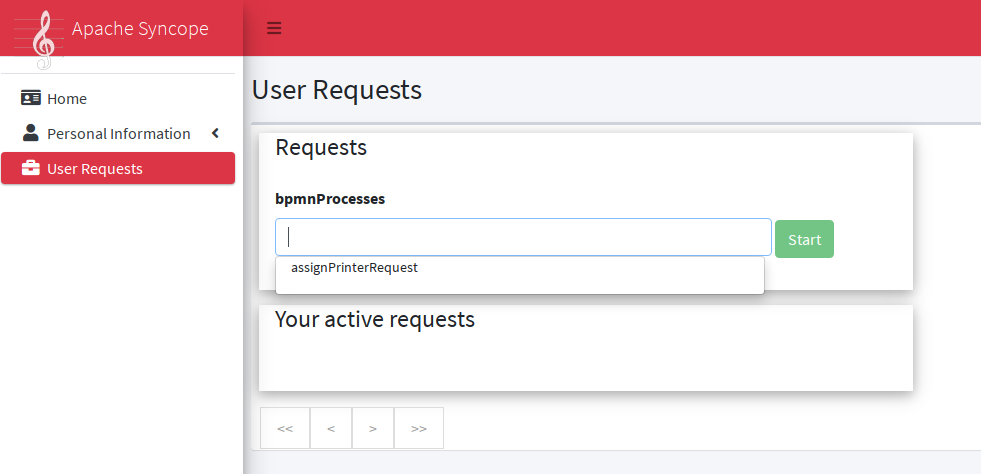

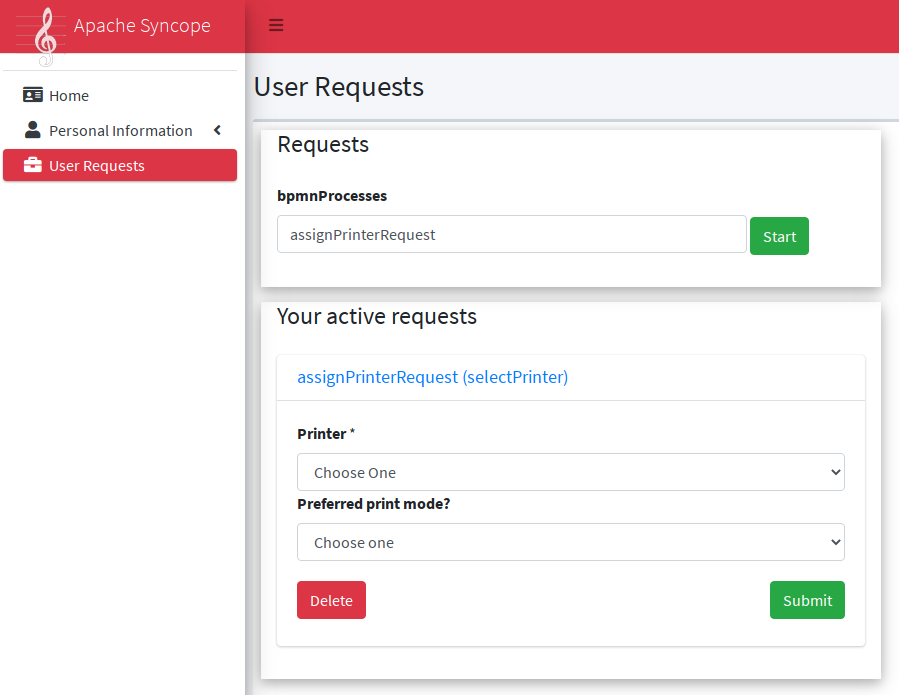

Request Management

Request management is a key-feature of Identity Governance and allows to define and manage, in a structured way,

whatever process intended to update identity attributes, memberships and relationships.

Request examples are "assign mobile phone", "grant groups on AD" or "consent access to application".

Users can initiate whichever request among the ones defined; once initiated, such requests will follow their own path, which might also include one or more approval steps.

The BPMN process below shows how to define an user request in XML; the same operation can be performed via the GUI editor provided by admin console.

In this user request definition:

-

user selects one of printers defined in the system, for self-assignment

-

administrator approves user’s selection

-

a relationship between user and printer is established

<process id="assignPrinterRequest" name="Assign printer" isExecutable="true">

<startEvent id="startevent1" name="Start"/>

<endEvent id="endevent1" name="End"/>

<sequenceFlow id="flow1" sourceRef="startevent1" targetRef="selectPrinter"/>

<userTask id="selectPrinter" name="Select printer" flowable:formKey="selectPrinter"

flowable:assignee="${wfExecutor}"> (1)

<extensionElements>

<flowable:formProperty id="printer" name="Printer"

variable="printer" type="dropdown" required="true"> (2)

<flowable:value id="dropdownValueProvider" name="printersValueProvider"/>

</flowable:formProperty>

<flowable:formProperty id="printMode" name="Preferred print mode?" type="enum">

<flowable:value id="bw" name="Black / White"/>

<flowable:value id="color" name="Color"/>

</flowable:formProperty>

</extensionElements>

</userTask>

<userTask id="approvePrinter" name="Approve printer" flowable:formKey="approvePrinter"> (3)

<extensionElements>

<flowable:formProperty id="username" name="Username" type="string"

expression="${userTO.username}" writable="false"/>

<flowable:formProperty id="printer" name="Selected printer" type="string"

expression="${printer}" writable="false"/>

<flowable:formProperty id="approve" name="Approve?" type="boolean"

variable="approve" required="true"/>

</extensionElements>

</userTask>

<sequenceFlow id="sid-D7047714-8E57-46B8-B6D4-4844DE330329"

sourceRef="selectPrinter" targetRef="approvePrinter"/>

<serviceTask id="createARelationship" name="Create ARelationship"

flowable:delegateExpression="${createARelationship}"/> (4)

<sequenceFlow id="sid-33880AE7-35C6-4A39-8E5B-12D8BA53F042"

sourceRef="approvePrinter" targetRef="createARelationship"/>

<sequenceFlow id="sid-831E1896-EDF9-4F7D-AA42-E86CC1F8C5D3"

sourceRef="createARelationship" targetRef="endevent1"/>

</process>| 1 | the first form defined is self-assigned to the user which has started this request |

| 2 | the dropdown type is a Syncope extension of the form property types supported by Flowable

and allows to inject a list of elements via the dropdownValueProvider value (with name printersValueProvider in this

sample), which must be a Spring bean implementing the

DropdownValueProvider

interface |

| 3 | the second form is a traditional approval form, as seen above |

| 4 | this is a FlowableServiceTask implementation which takes care of establishing the relationship |

3.11. Notifications

Apache Syncope can be instructed to send out notification e-mails when certain events occur.

Every notification generates one or more notification tasks, holding the actual

e-mails to be sent. The tasks are ordinarily scheduled for execution according to the value provided for

notificationjob.cronExpression - see below for details - and can be saved for later

re-execution.

When defining a notification, the following information must be provided:

-

notification template - template for e-mail generation

-

sender - e-mail address appearing in the

Fromfield of the generated e-mail(s) -

subject - text used as e-mail subject

-

recipient e-mail attribute - which user attribute shall be considered as e-mail address for delivery (as users might in principle have different e-mail attributes)

-

recipient(s) - the actual e-mail recipient(s) which can be specified either as:

-

list of static e-mail addresses

-

matching condition to be applied to available users

-

Java class implementing the RecipientsProvider interface

-

-

notification event(s) - event(s) triggering the enclosing notification

-

about - the condition matching Users, Groups or Any Objects which are evaluated for the specified events; for users, the matching entities can be also considered as additional recipients

-

trace level - control how much tracing (including logs and execution details) shall be carried over during execution of the generated notification tasks

3.11.1. Notification Events

Notification (and Audit) events are essentially a means of identifying the invocation of specific methods within the Core, in line with join points in the Aspect Oriented Programming (AOP).

An event is identified by the following five coordinates:

-

type - which can be one of

-

LOGIC -

TASK -

PROPAGATION -

PULL -

PUSH -

CUSTOM

-

-

category - the possible values depend on the selected type: for

LOGICthe Logic components available, forTASKthe various Scheduled Tasks configured, forPROPAGATION,PULLandPUSHthe defined Any Types -

subcategory - completes category with external resource name, when selecting

PROPAGATION,PULLorPUSH -

event type - the final identification of the event; depends on the other coordinates

-

success or failure - whether the current event shall be considered in case of success or failure

The admin console provides tooling to assist with the specification of valid events.

|

An event is uniquely identified by a string of the following form: Some samples:

|

|

Custom events can be used to trigger notifications from non-predefined joint points, as BPMN |

3.11.2. Notification Templates

A notification template is defined as a pair of JEXL expressions, to be used respectively for plaintext and HTML e-mails, and is available for selection in the notification specification.

|

Notification templates can be easily managed via the admin console. |

The full power of JEXL expressions - see reference

and some examples - is available.

For example, the user variable, an instance of

UserTO

with actual value matching the about condition as introduced above, can be used.

Hi ${user.getPlainAttr("firstname").get().values[0]} ${user.getPlainAttr("surname").get().values[0]},

welcome to Syncope!

Your username is ${user.username}.

Your email address is ${user.getPlainAttr("email").get().values[0]}.

Best regards.<html>

<body>

<h3>Hi ${user.getPlainAttr("firstname").get().values[0]} ${user.getPlainAttr("surname").get().values[0]},

welcome to Syncope!</h3>

<p>Your username is ${user.username}.<br/>

Your email address is ${user.getPlainAttr("email").get().values[0]}.</p>

<p>Best regards.</p>

</body>

</html>3.12. Commands

A Command is defined via an Implementation of type COMMAND, providing a Java or Groovy class

for the

Command,

interface, designed to optionally take parameters.

The typical use case is to encapsulate, in a single logical unit, the equivalent of two or more REST calls.

Once defined, Commands can be executed via dedicated REST endpoints, or via Console UI.

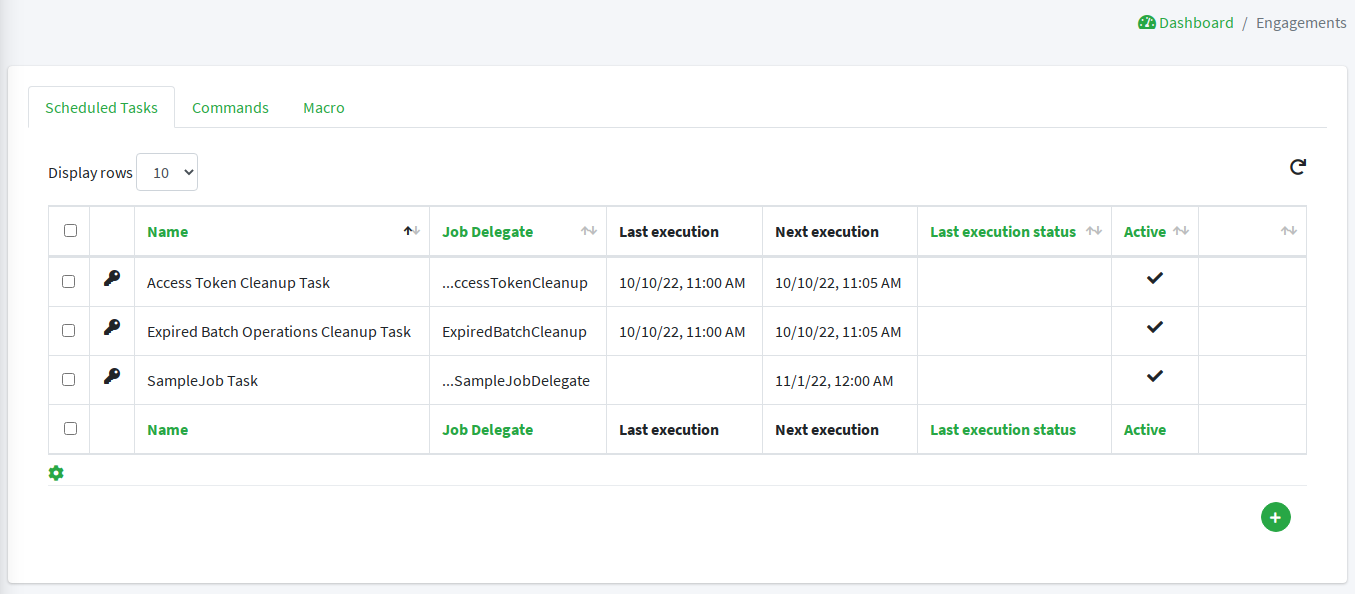

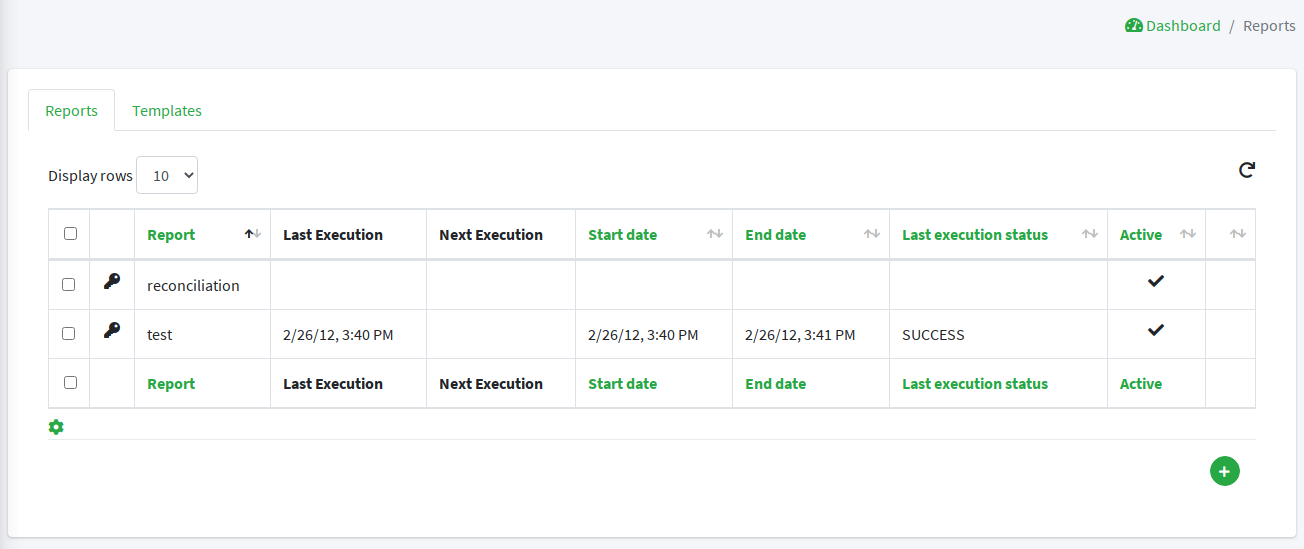

3.13. Tasks

Tasks control the effective operations that are ongoing in the Core.

Whilst tasks define what and how to perform, they are supposed to be run by some entity (depending on the actual task type, see below for details); their execution result can be saved for later examination.

3.13.1. Propagation

A propagation task encapsulates all the information that is required - according to the defined mapping - to create, update or delete a given User, Group or Any Object, to / from a certain Identity Store:

-

operation -

CREATE,UPDATEorDELETE -

connObjectKey - value for ConnId unique identifier on the Identity Store

-

oldConnObjectKey - the former unique identifier on the Identity Store: bears value only during updates involving the unique identifier

-

attributes - set of ConnId attributes built upon internal identity data and configured mapping

-

resource - related external resource

-

objectClass - ConnId object class

-

entity - reference to the internal identity: User, Group or Any Object

|

Propagation tasks are automatically generated via the configured PropagationManager, executed (by default) via the PriorityPropagationTaskExecutor during the propagation process, and are permanently saved - for later re-execution or for examining the execution details - depending on the trace levels set on the related external resource. Automatic retry in case of failure can be configured by mean of a propagation policy, for the related external resource. |

3.13.2. Pull

Pull tasks are required to define and trigger the pull process from Identity Stores.

When defining a pull task, the following information must be provided:

-

related external resource

-

chosen pull mode

-

destination Realm - where entities selected for creation are going to be placed

-

whether creation, update or deletion on internal storage are allowed or not

-

whether remediation is enabled

-

whether to synchronize the status information from the related identity store

-

selected matching and unmatching rules

-

optional pull action(s)

-

scheduling information:

-

when to start

-

|

Pull tasks are executed, either upon request or due to a schedule, via the PullJobDelegate during the pull process, and are permanently saved - for later re-execution or for examining the execution details - depending on the trace level set on the related external resource. |

|

DryRun

It is possible to simulate the execution of a pull (or push) task without performing any actual modification by selecting the DryRun option. The execution results will be still available for examination. |

|

Concurrent Pull Task Executions

By default, pull tasks are set to accept and sequentially process the objects received from the configured External Resource; it is also possible to configure a pull task to work on several objects at once in order to speed up the overall execution time. |

3.13.3. Push

Push tasks are required to define and trigger the push process to Identity Stores.

When defining a push task, the following information must be provided:

-

related external resource

-

source Realm - where entities to push will be read from

-

filter information for selecting which internal entities will be pushed onto the identity store

-

whether creation, update or deletion on the identity store are allowed or not

-

whether to synchronize the status information with internal storage

-

selected matching and unmatching rules

-

optional push action(s)

-

scheduling information:

-

when to start

-

|

Push tasks are executed, either upon request or due to a schedule, via the PushJobDelegate during the push process, and are permanently saved - for later re-execution or for examining the execution details - depending on the trace level set on the related external resource. |

|

Concurrent Push Task Executions

By default, push tasks are set to sequentially send items to the configured External Resource; it is also possible to configure a push task to work on several objects at once in order to speed up the overall execution time. |

3.13.4. Notification

A notification task encapsulates all the information that is required to send out a notification e-mail, according to the specification provided in a given notification:

-

entity - reference to the internal identity - User, Group or Any Object - the notification task refers to

-